Manuscript accepted on :22-10-2024

Published online on: 04-12-2024

Plagiarism Check: Yes

Reviewed by: Dr. Ana Golez

Second Review by: Dr Nurul Diyana Sanuddin

Final Approval by: Dr. Prabhishek Singh

Rashmi Singh1*, Aryan Chaudhary2 and Samrat Ray3

1Amity Institute of Applied Sciences, Amity University Uttar Pradesh, Noida, India.

2Chief Scientific Advisor, Bio Tech Sphere Research, Kolkata, West Bengal, India.

3Dean and Head of International Relations, IIMS, Pune, India.

Corresponding Author E-mail: winrashmi@gmail.com

DOI : https://dx.doi.org/10.13005/bpj/3080

Abstract

Integrating Artificial Intelligence (AI) in medical imaging has revolutionized diagnostics by enhancing accuracy and efficiency. However, challenges related to interpretability, domain shifts, and trust hinder clinical adoption. This study introduces a fuzzy-set-theoretic framework to address these issues, focusing on real-world applications. We used a case study in which fuzzy membership grades (ranging from 0.1 to 0.9) were employed to classify tumor pixels, with grades above a threshold of 0.6 indicating a higher likelihood of tumor. Weighted average defuzzification was used to integrate parameters such as pixel intensity, grayscale, and texture coefficient. Results demonstrated that pixels exceeding the threshold consistently aligned with tumor regions, validating the framework's reliability. Additionally, we explored domain shifts through feature distribution analysis between source and target datasets, highlighting the need for adaptive models. This research emphasizes the role of fuzzy sets in improving interpretability and adaptability in clinical settings, contributing to AI's trustworthiness and clinical acceptance.

Keywords

AI Adaptation; Domain Shift; Interpretability; Medical Imaging; Trustworthiness

Copy the following to cite this article: Singh R, Chaudhary A, Ray S. Decoding Challenges using Mathematics of Fuzzy Theory in Interpretability, Shifts, Adaptation and Trust. Biomed Pharmacol J 2025;18(March Spl Edition).

Copy the following to cite this URL: Singh R, Chaudhary A, Ray S. Decoding Challenges using Mathematics of Fuzzy Theory in Interpretability, Shifts, Adaptation and Trust. Biomed Pharmacol J 2025;18(March Spl Edition). Available from: https://bit.ly/4fWDyTj

Introduction

The integration of Artificial Intelligence (AI) into medical imaging marks a transformative era in diagnostic medicine, one in which technology meets healthcare and promises better patient outcomes and improved clinical workflows. The combined role of fuzzy methods and AI in medical imaging is evident in their ability to analyse complex data with a precision and speed that were previously unattainable, heralding a new age of diagnostic precision and efficiency1. However, continuous advancement also brings challenges. The clinical deployment of AI systems in medical imaging requires a multifaceted approach that addresses interpretability, domain shift, adaptation, and trustworthiness to ensure their efficacy and integration into patient care (RSNA, 2023).

With respect to interpretability, the black-box nature of AI systems often poses a considerable barrier, and we use fuzzy logic to address it. The ability of clinicians to interpret and trust AI decision-making processes is paramount, particularly when such decisions affect patient diagnosis and treatment (NCBI, 2023). This trust can only be established through transparent AI models that provide insight into their reasoning, thereby fostering an environment of informed clinical decision-making and adequate patient communication.

Moreover, domain shift presents a substantial obstacle. AI models, often trained on specific datasets, may fail when applied to new datasets from different clinical environments, a situation that is common in the diverse landscape of healthcare2,3. Addressing this problem requires a robust adaptation procedure that enables AI systems to maintain steady performance across a spectrum of medical imaging modalities and patient populations, without the need for extensive retraining4.

Lastly, the trustworthiness of AI systems in medical imaging extends beyond accuracy. It encompasses the reliability, fairness, and ethical considerations that are integral to clinical acceptance. Ensuring that AI systems adhere to these principles is not only a technical challenge but also a moral imperative, as the main goal of AI in healthcare is to benefit patient safety and well-being5.

As the field of medical imaging continues to evolve, there is a growing need both for technologically advanced AI systems and for interdisciplinary approaches. The next section of this article presents the historical context of AI. The following section deals with interpretability and presents a case study using fuzzy sets in which the parameters are pixel intensity, greyscale, and texture coefficient, with a threshold value of 0.6. We then analyze domain shift using four features. The article ends with conclusions and directions for future study.

Historical Context of AI in Medical Imaging

Artificial Intelligence (AI) in medical imaging is not a brand-new phenomenon but a steadily evolving one, with its inception dating back to the mid-20th century. The idea of AI was first described in 1950, and it aimed to mimic human cognitive functions. However, the practical implementation of AI in medicine was stalled by the technological inadequacies of the initial models. These limitations persisted until the early 2000s, when the advent of deep learning significantly advanced the capabilities of AI systems, overcoming many previous barriers and setting the stage for their integration into medical imaging6.

The 1980s marked a resurgence of AI due to international competition, but it was also a period known as the ‘AI winter’ from 1983 to 1993, characterized by a collapse of the market for the computational power needed at the time, leading to a withdrawal of funding. However, research and development in AI did not come to a complete halt and picked up pace thereafter, setting the groundwork for its eventual resurgence and integration into the medical field7.

Current State of the Art and its Limitations

Today, AI applications in medical imaging are widespread and continuously growing, with the field widely recognizing that AI will completely transform medical diagnostics. Current AI models have shown remarkable success in the interpretation of medical images, and their use has been extended to various applications, including the detection of abnormalities and quantification of disease processes8,9.

However, despite these advances, AI in medical imaging is not without its limitations. One such limitation is the ‘black box’ nature of many AI systems, where the reasoning behind AI decisions is not transparent, posing a significant challenge for clinical acceptance. Additionally, while AI aims to replicate human decision-making, it still struggles with issues such as domain shift, where models trained on one set of data fail to generalize to other datasets, often seen in the diverse clinical environments encountered in healthcare10.

The Need for Improved AI Interpretability and Trust in Clinical Settings

Interpretability of AI in medical imaging is essential for clinician trust. Clinicians need to comprehend the AI decision-making process to make informed decisions and communicate effectively with patients. The paradigm change that AI is bringing to healthcare is driven by the increasing availability of healthcare data and improvements in analytics methods. The future of AI in healthcare is envisioned to address these interpretability issues, thereby increasing trust and reliability in clinical settings11.

Recent advancements in artificial intelligence (AI) have demonstrated the potential to transform medical diagnostics, particularly through techniques like fuzzy logic and explainable AI 12, 13 frameworks. Fuzzy soft set theory, for example, has been extensively reviewed for its applications in medical diagnosis and decision making14, offering a structured approach to handle uncertainty in complex medical data15. Furthermore, explainability and transparency are critical to the adoption of AI in clinical settings, as many AI models, particularly in radiology, struggle with the “black box” problem, which undermines clinicians’ trust16, 17. To address these issues, recent research has emphasized the importance of harmonized data infrastructures and federated learning models, which facilitate secure and efficient data sharing across healthcare systems, enabling the development of robust AI models for medical imaging18. Additionally, innovations in fuzzy logic, such as the intelligent saline control valve, highlight the practical applications of AI in patient care, providing solutions to optimize clinical procedures19.

Interpretability

In machine learning, interpretability is defined as the degree to which a human can understand the reasons behind a model’s decision. This is not only a technical prerequisite but also an ethical imperative, especially in sectors where decisions have profound implications for human lives, such as healthcare. Trust in these systems comes from their capacity to provide transparent reasoning for their decisions, which encourages greater acceptance of, and adherence to, AI-assisted clinical decision-making.

Approach for Model Interpretability

The quest for interpretability has given rise to a range of techniques. Inherently interpretable models such as logistic regression and decision trees offer clarity through their simplicity and the direct way they can be mapped to human-understandable rules. However, with the arrival of complex models such as deep neural networks, the field has moved toward post-hoc interpretability. Model-agnostic approaches such as LIME provide local interpretability, explaining individual predictions regardless of the model’s complexity. SHAP values extend this by assigning each feature a contribution to a given prediction, drawing on cooperative game theory to ensure consistency and accuracy in feature attribution.

Visual techniques are also instrumental; for instance, Class Activation Mapping (CAM) and its variants allow the visualization of the regions in the input image that are essential for a convolutional neural network’s predictions, providing clinicians with a visual rationale for the AI’s decision.
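To make the game-theoretic idea behind SHAP concrete, the following minimal sketch computes exact Shapley values by averaging each feature's marginal contribution over all feature orderings. It is an illustration only and does not use the SHAP library: `shapley_values`, `toy_value`, and the additive toy model are hypothetical names introduced here, and exact enumeration is practical only for a handful of features.

```python
from itertools import permutations

def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function over n_features 'players'."""
    totals = [0.0] * n_features
    orderings = list(permutations(range(n_features)))
    for order in orderings:
        coalition = set()
        for feature in order:
            before = value_fn(frozenset(coalition))
            coalition.add(feature)
            after = value_fn(frozenset(coalition))
            # Marginal contribution of this feature in this ordering.
            totals[feature] += after - before
    return [t / len(orderings) for t in totals]

# Hypothetical additive model: each feature contributes a fixed amount.
WEIGHTS = [1.0, 2.0, 3.0]

def toy_value(coalition):
    return sum(WEIGHTS[i] for i in coalition)

phi = shapley_values(toy_value, 3)
print(phi)
```

For an additive model each feature's Shapley value equals its own weight, and the values sum to the output of the full model, which is the consistency property the text refers to.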

Interpretability in Medical Imaging

The use and significance of interpretability in medical imaging are illustrated through specific case studies. For instance, a study on interpreting AI decisions in mammography has shown that using heatmaps to indicate areas of interest helps radiologists to quickly focus on potential issues and corroborate the AI’s findings with their expertise. In another case, the use of AI to diagnose diabetic retinopathy was greatly enhanced by interpretability techniques that allowed ophthalmologists to understand the basis of the AI’s diagnostic suggestions, thus integrating AI assistance seamlessly into their clinical workflow. Fig. 1 simulates the appearance of an AI-generated heatmap on a medical image for illustrative purposes.

|

Figure 1: A medical scan with a heatmap overlay, to illustrate AI interpretability in medical diagnostics |

Materials and Methods

Case Study 1

Consider the problem of analyzing an MRI scan for tumor detection. The challenge is to accurately identify tumor tissue amidst a variety of other factors.

In this example, we consider an MRI scan with 10 distinct pixels, each characterized by four parameters: Membership Grade, Pixel Intensity, Grey Scale, and Texture Coefficient. The task is to analyze these pixels using fuzzy logic to determine the likelihood of each pixel being part of a tumor.

We assume 10 pixels, each with four parameters.

Each pixel’s membership grade (0.1 to 0.9) reflects its likelihood of being tumor tissue.

A threshold of 0.6 indicates higher tumor likelihood.

Pixel Intensity, Grayscale, and Texture Coefficient are integrated with membership grades for tumor detection.

The Weighted Average defuzzification method computes a single value for each pixel.

Higher defuzzified values indicate a greater likelihood of tumor presence.

Table 1: Parameter influence on the final defuzzified value

| Pixel | Membership Grade | Pixel Intensity | Grey Scale | Texture Coefficient | Threshold (Grade > 0.6) | Defuzzified Value |
|---|---|---|---|---|---|---|
| 1 | 0.10 | 35 | 120 | 0.30 | 0.00 | 0.000000 |
| 2 | 0.30 | 45 | 130 | 0.35 | 0.00 | 0.000000 |
| 3 | 0.50 | 60 | 145 | 0.40 | 0.00 | 0.000000 |
| 4 | 0.20 | 20 | 110 | 0.20 | 0.00 | 0.000000 |
| 5 | 0.40 | 40 | 125 | 0.25 | 0.00 | 0.000000 |
| 6 | 0.60 | 55 | 150 | 0.45 | 0.00 | 0.000000 |
| 7 | 0.80 | 80 | 200 | 0.60 | 0.80 | 0.141933 |
| 8 | 0.70 | 75 | 190 | 0.55 | 0.70 | 0.101391 |
| 9 | 0.90 | 90 | 210 | 0.65 | 0.90 | 0.204334 |
| 10 | 0.85 | 85 | 205 | 0.70 | 0.85 | 0.191607 |

Pixels 7, 8, 9, and 10, with defuzzified values of 0.141933, 0.101391, 0.204334, and 0.191607, respectively, suggest a likelihood of being part of a tumor.
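The thresholding and weighted-average steps of Case Study 1 can be sketched as follows. The article does not state the weights or the scaling used to obtain the defuzzified values in Table 1, so the weights `(0.4, 0.3, 0.3)`, the division by 255, and the function names are assumptions made purely for illustration; the scores produced here therefore do not reproduce the tabulated values, only the same pattern of zero versus non-zero pixels.

```python
def weighted_average(values, weights):
    """Weighted average: sum(w * v) / sum(w)."""
    total = sum(weights)
    if total == 0:
        return 0.0
    return sum(v * w for v, w in zip(values, weights)) / total

def pixel_score(grade, intensity, grey, texture,
                threshold=0.6, weights=(0.4, 0.3, 0.3)):
    """Score a pixel; grades not strictly above the threshold score zero."""
    if grade <= threshold:
        return 0.0
    # Assumed scaling: intensity and grey level mapped from 0..255 to 0..1.
    features = (intensity / 255.0, grey / 255.0, texture)
    return grade * weighted_average(features, weights)

# (pixel, membership grade, pixel intensity, grey scale, texture coefficient)
PIXELS = [
    (1, 0.10, 35, 120, 0.30), (2, 0.30, 45, 130, 0.35),
    (3, 0.50, 60, 145, 0.40), (4, 0.20, 20, 110, 0.20),
    (5, 0.40, 40, 125, 0.25), (6, 0.60, 55, 150, 0.45),
    (7, 0.80, 80, 200, 0.60), (8, 0.70, 75, 190, 0.55),
    (9, 0.90, 90, 210, 0.65), (10, 0.85, 85, 205, 0.70),
]

scores = {pid: pixel_score(g, i, gs, t) for pid, g, i, gs, t in PIXELS}
flagged = [pid for pid, s in scores.items() if s > 0]
print(flagged)
```

Because the comparison is strict, pixel 6 (grade exactly 0.60) is not flagged, which matches the zero entry for that pixel in Table 1.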

Case Study 2

Consider two domains of medical imaging data for tumor detection, Domain A (Source) and Domain B (Target). Each domain comprises data points with four features: Feature 1 (e.g., tumor size), Feature 2 (e.g., texture), Feature 3 (e.g., shape irregularity), and Feature 4 (e.g., presence of specific markers).

The challenge is to adapt an AI model trained on Domain A to maintain its diagnostic accuracy when applied to Domain B, addressing the domain shift numerically represented by differences in feature distributions and associations with tumor characteristics.

In this example (hypothetical data), we use fuzzy logic for Feature 1 classification and apply domain adaptation techniques for Feature 2, Feature 3, and Feature 4.

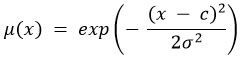

The Fuzzy Logic Parameters used are: benign threshold for Feature 1 is 5; malignant threshold for Feature 1 is 7. The domain shift is shown in Fig. 2.

Table 2: Domain A (Source)

| Feature 1 | Benign Grade | Malignant Grade | Feature 2 | Feature 3 | Feature 4 |
|---|---|---|---|---|---|
| 4.1 | 1.00 | 0.00 | 2.08 | 7.42 | 7.85 |
| 4.42 | 0.89 | 0.11 | 2.59 | 7.64 | 7.99 |
| 4.74 | 0.78 | 0.22 | 3.11 | 7.85 | 8.14 |
| 5.07 | 0.67 | 0.33 | 3.62 | 8.07 | 8.29 |
| 5.39 | 0.56 | 0.44 | 4.13 | 8.29 | 8.44 |
| 5.71 | 0.44 | 0.56 | 4.65 | 8.50 | 8.58 |
| 6.03 | 0.33 | 0.67 | 5.16 | 8.72 | 8.73 |
| 6.36 | 0.22 | 0.78 | 5.67 | 8.94 | 8.88 |
| 6.68 | 0.11 | 0.89 | 6.19 | 9.15 | 9.02 |
| 7.00 | 0.00 | 1.00 | 6.70 | 9.37 | 9.17 |

Table 3: Domain B (Target)

| Feature 1 | Benign Grade | Malignant Grade | Feature 2 | Feature 3 | Feature 4 |
|---|---|---|---|---|---|
| 7.1 | 0.0 | 1.0 | 13.07 | 13.96 | 8.18 |
| 7.37 | 0.0 | 1.0 | 13.25 | 14.03 | 8.90 |
| 7.63 | 0.0 | 1.0 | 13.44 | 14.11 | 9.61 |
| 7.9 | 0.0 | 1.0 | 13.62 | 14.18 | 10.33 |
| 8.17 | 0.0 | 1.0 | 13.80 | 14.25 | 11.05 |
| 8.43 | 0.0 | 1.0 | 13.99 | 14.33 | 11.76 |
| 8.7 | 0.0 | 1.0 | 14.17 | 14.40 | 12.48 |
| 8.97 | 0.0 | 1.0 | 14.35 | 14.47 | 13.20 |
| 9.23 | 0.0 | 1.0 | 14.54 | 14.55 | 13.91 |
| 9.5 | 0.0 | 1.0 | 14.72 | 14.62 | 14.63 |

Figure 2: Domain shift across all four features, highlighting the differences in distributions and relationships that characterize the two domains. Blue dots denote Domain A and red crosses denote Domain B.
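The benign and malignant grades in Tables 2 and 3 can be reproduced with a simple linear ramp membership function, sketched below. The ramp shape and its lower endpoint of 4.1 are inferred from the tabulated values rather than stated in the text (which quotes a benign threshold of 5 and a malignant threshold of 7), so both should be read as assumptions; `ramp_down` and `grades` are hypothetical helper names.

```python
def ramp_down(x, lo, hi):
    """Membership equal to 1 at or below lo, 0 at or above hi, linear between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)

def grades(feature1, lo=4.1, hi=7.0):
    """Return (benign grade, malignant grade), rounded as in the tables."""
    benign = ramp_down(feature1, lo, hi)
    return round(benign, 2), round(1.0 - benign, 2)

DOMAIN_A = [4.1, 4.42, 4.74, 5.07, 5.39, 5.71, 6.03, 6.36, 6.68, 7.00]
DOMAIN_B = [7.1, 7.37, 7.63, 7.9, 8.17, 8.43, 8.7, 8.97, 9.23, 9.5]

table_a = [grades(x) for x in DOMAIN_A]
table_b = [grades(x) for x in DOMAIN_B]
print(table_a)
print(table_b)
```

Every Domain B value of Feature 1 lies above the upper endpoint, so all target samples receive a malignant grade of 1.0. A membership function tuned on the source range therefore saturates on the target data, which is one concrete way the domain shift shows up.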

Case Study 3 : Application of Intuitionistic Fuzzy Sets in Medical Imaging for Tumor Detection

Step 1: Data Preprocessing

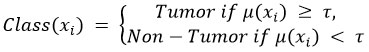

Normalization of Pixel Intensity Values

Normalize pixel intensity values I to a standard range, typically [0, 1], using the formula:
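A standard min-max form consistent with this description is given below as a sketch; the symbols $I_{\min}$ and $I_{\max}$ (the smallest and largest intensity in the image) and $I_{\text{norm}}$ are notation assumed here.

```latex
I_{\text{norm}} = \frac{I - I_{\min}}{I_{\max} - I_{\min}}, \qquad I_{\text{norm}} \in [0, 1]
```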

Segmentation of Medical Images

Apply segmentation algorithms such as Otsu’s method or k-means clustering to identify Regions of Interest (ROIs) within the medical images.
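As an illustration of the segmentation step, the sketch below implements Otsu's method in plain Python: it picks the grey level that maximizes the between-class variance of the intensity histogram. The function name `otsu_threshold`, the assumption of integer grey levels in 0..255, and the synthetic two-level "image" are all introduced here for demonstration and are not taken from the study.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level t that maximizes between-class variance.

    Pixels with value <= t form the background class, the rest the foreground.
    Expects integer grey levels in the range 0..levels-1.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    weight_bg = 0        # number of pixels at or below the candidate level
    sum_bg = 0.0         # intensity sum of those pixels
    best_t, best_var = 0, -1.0
    for t in range(levels):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t

# Synthetic example: 50 dark pixels and 50 bright pixels.
image = [10] * 50 + [200] * 50
threshold = otsu_threshold(image)
roi_mask = [p > threshold for p in image]
print(threshold, roi_mask.count(True))
```

In practice a library routine would normally be preferred over a hand-written loop; the version above is only meant to show what the method computes.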

Step 2: Fuzzy Logic for Interpretability

Definition of Fuzzy Membership Functions

Define membership functions μ for key features x (e.g., pixel intensity, grayscale, texture coefficient). For example, a Gaussian membership function can be used:
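The usual Gaussian membership function has the form below, where the centre $c$ and width $\sigma$ are parameter names assumed here. The membership grades reported in Table 4 are consistent with $c = 0.5$ and $\sigma = 0.1$, although the text does not state these values.

```latex
\mu(x) = \exp\!\left( -\frac{(x - c)^2}{2\sigma^2} \right)
```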

Calculation of Membership Grades

Compute membership grades μ(xi) for each pixel i based on the defined fuzzy membership functions.

Threshold Application for Classification

Apply a threshold τ to classify pixels as part of the tumor or not:
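One way to write this rule is shown below. The strict inequality is an assumption chosen to agree with the "Grade > 0.6" convention of Table 1, and $\text{class}(i)$ is notation introduced here.

```latex
\text{class}(i) =
\begin{cases}
\text{tumor}, & \mu(x_i) > \tau \\
\text{non-tumor}, & \text{otherwise}
\end{cases}
```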

Step 3: Domain Adaptation

Identification of Source and Target Domains

Identify the source domain Ds (training dataset) and target domain DT (new clinical dataset).

Transfer Learning

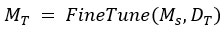

Fine-tune the pre-trained model Ms from the source domain using labeled data from the target domain:
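A generic way to express fine-tuning is as loss minimization on the target data starting from the source parameters. The notation below ($\theta_S$ for the parameters of the source model, $\theta_T$ for the fine-tuned parameters, $M_\theta$ for the model, $\mathcal{L}$ for the loss) is assumed for illustration and is not taken from the original.

```latex
\theta_T = \arg\min_{\theta} \; \frac{1}{|D_T|} \sum_{(x, y) \in D_T} \mathcal{L}\big( M_{\theta}(x), y \big),
\qquad \theta \text{ initialised at } \theta_S
```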

Domain-Invariant Feature Learning

Employ domain-invariant feature learning techniques to learn features f that are robust across both domains Ds and DT :

where D is a domain discriminator.
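One common adversarial formulation consistent with this description, given here as an assumption rather than as the authors' stated objective, trains the feature extractor $f$ so that the discriminator $D$ cannot tell source features from target features. Note that $D$ without a subscript denotes the discriminator, while $D_S$ and $D_T$ denote the two domains.

```latex
\min_{f} \max_{D} \;
\mathbb{E}_{x \sim D_S}\big[ \log D(f(x)) \big]
+ \mathbb{E}_{x \sim D_T}\big[ \log\big( 1 - D(f(x)) \big) \big]
```

In practice this term is usually combined with a supervised task loss on the labeled source data.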

Step 4: Model Evaluation

Cross-Validation on Target Domain

Perform k-fold cross-validation on the target domain DT to evaluate model performance. Calculate metrics such as accuracy, precision, recall, and F1 score.
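The evaluation step can be sketched with two small helpers: one that yields train/test index splits for k-fold cross-validation and one that computes the four metrics named above for binary labels. The helper names and the toy labels are hypothetical; a real evaluation would use target-domain predictions from the adapted model.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k interleaved folds."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train_idx, test_idx

def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for labels in {0, 1} (1 = tumor)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

splits = list(k_fold_indices(5, 2))
metrics = binary_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
print(splits)
print(metrics)
```

Each sample appears in exactly one test fold, so averaging the per-fold metrics gives an estimate that uses every target-domain sample once for testing.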

Interpretability Assessment

Use techniques like Class Activation Mapping (CAM) or LIME for visual explanations.

Step 5: Incremental Data Integration:

Continuously integrate new data D(new) into the model to update its parameters:
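A simple gradient-based form of such an update is sketched below. The learning rate $\eta$, the loss $\mathcal{L}$, and the parameter vector $\theta_t$ are notation assumed here, and other update schemes are equally compatible with the description.

```latex
\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} \mathcal{L}\big( \theta_t ; D^{(\text{new})} \big)
```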

Results and Discussion

The results from the case studies illustrate the effectiveness of the fuzzy logic framework in addressing key challenges in AI-based medical imaging, such as interpretability and domain shift. Fuzzy logic provides a transparent way to manage complex datasets, making it easier for clinicians to trust AI outputs. The weighted average defuzzification method ensured the accurate classification of tumor pixels, with higher defuzzified values correlating with tumor likelihood.

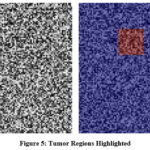

For case study 1, the analysis helps in understanding and enhancing interpretability in AI for medical imaging, demonstrating a practical application of fuzzy logic in a complex, real-world scenario. Intuitionistic fuzzy sets extend traditional fuzzy sets by incorporating a degree of hesitancy, which offers a more nuanced way to handle uncertainty and improve the robustness of AI models. Figs. 3 and 4 give a visual overview of the preprocessing steps of case study 3.

Normalization of pixel intensity values to a standard range [0, 1].

|

Figure 3: Normalization of Pixel Intensity Values |

Segmentation of MRI scans to identify Regions of Interest (ROIs).

|

Figure 4: Segmentation of Medical Images |

Intuitionistic fuzzy membership, non-membership, and hesitancy functions for key features.

Calculation of membership, non-membership, and hesitancy grades for each pixel.

Table 4: Calculation of Membership, Non-membership, and Hesitancy Grades.

| Pixel Value | Membership (μ) | Non-membership (ν) | Hesitancy (π) |
|---|---|---|---|
| 0.1 | 0.0 | 1.0 | 0.0 |
| 0.3 | 0.14 | 0.86 | 0.0 |
| 0.5 | 1.0 | 0.0 | 0.0 |
| 0.7 | 0.14 | 0.86 | 0.0 |
| 0.9 | 0.0 | 1.0 | 0.0 |

Application of thresholds to classify pixels as tumor or non-tumor.

Table 5: Threshold Application for Classification

| Pixel Value | Membership (μ) | Non-membership (ν) | Hesitancy (π) | Classification |
|---|---|---|---|---|
| 0.1 | 0.0 | 1.0 | 0.0 | Non-Tumor |
| 0.3 | 0.14 | 0.86 | 0.0 | Non-Tumor |
| 0.5 | 1.0 | 0.0 | 0.0 | Tumor |
| 0.7 | 0.14 | 0.86 | 0.0 | Non-Tumor |
| 0.9 | 0.0 | 1.0 | 0.0 | Non-Tumor |
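The grades and classifications in Tables 4 and 5 can be reproduced with the short sketch below. It assumes a Gaussian membership function with centre 0.5 and width 0.1, a non-membership grade defined as the complement of membership, and a threshold of 0.6 carried over from Case Study 1; these values are inferred from the tabulated numbers and are not stated in the text.

```python
import math

def gaussian_membership(x, centre=0.5, sigma=0.1):
    """Gaussian membership grade; centre and sigma are assumed values."""
    return math.exp(-((x - centre) ** 2) / (2 * sigma ** 2))

def intuitionistic_grades(x):
    """Return (membership, non-membership, hesitancy) for a pixel value."""
    mu = gaussian_membership(x)
    nu = 1.0 - mu           # assumption: non-membership is the complement
    pi = 1.0 - mu - nu      # hesitancy, zero under the assumption above
    return mu, nu, pi

def classify(x, tau=0.6):
    """Label a pixel as tumor when its membership grade exceeds tau."""
    mu, _, _ = intuitionistic_grades(x)
    return "Tumor" if mu > tau else "Non-Tumor"

PIXEL_VALUES = [0.1, 0.3, 0.5, 0.7, 0.9]
rows = [
    (x, *(round(g, 2) for g in intuitionistic_grades(x)), classify(x))
    for x in PIXEL_VALUES
]
for row in rows:
    print(row)
```

With the complement assumption the hesitancy degree is zero for every pixel, as in Table 4; a non-zero hesitancy would require a non-membership function defined independently of the membership function.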

The remaining steps are the domain adaptation process, which ensures robustness across different clinical environments; cross-validation and evaluation of model performance using various metrics; and continuous learning and performance monitoring to maintain model accuracy. The classification results for the pixels in the MRI scan using intuitionistic fuzzy sets are shown in Fig. 5.

|

Figure 5: Tumor Regions Highlighted |

Alternative Methods and Hypotheses

While fuzzy logic enhances interpretability, other AI techniques, such as explainable neural networks and decision trees, also aim to provide transparent decision-making. Methods like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-Agnostic Explanations) offer feature-level insights but may struggle with generalization across diverse datasets. Additionally, non-fuzzy models may outperform fuzzy logic in cases where pixel-level precision is less critical, such as in broad pattern recognition tasks.

Limitations

One limitation of our approach is the reliance on predefined membership functions and threshold values, which may need adjustment for different imaging modalities. Moreover, the framework has been tested primarily on a single case study with simulated data, meaning further validation on real-world clinical datasets is required for broader applicability.

Uncertainties and Sensitivity of Results

The sensitivity of the fuzzy logic framework to changes in membership grades and defuzzification parameters presents a potential source of variability. Small variations in these parameters may alter the classification outcome, highlighting the need for robust parameter optimization. Additionally, domain shift remains a challenge, as the model’s accuracy may decrease in clinical settings with significant differences in equipment or patient demographics. Continuous fine-tuning and adaptive learning mechanisms are necessary to mitigate these effects.

Implications for Clinical Practice

The application of fuzzy logic provides a promising pathway for improving the interpretability and trustworthiness of AI models in medical imaging. However, collaboration between data scientists and clinicians will be essential to tailor these models to specific clinical workflows. Future work should focus on integrating fuzzy logic with other adaptive AI techniques to further enhance model robustness and generalizability across diverse clinical environments.

Domain Shift

Understanding Domain Shift in AI

Domain shift refers to the change in data distribution that a machine learning model encounters when it is applied to environments or scenarios that differ from its training data. This phenomenon is critical in AI, as the mismatch between the source (training) and target (application) domains can significantly degrade a model’s performance.
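A simple way to quantify such a shift for a single feature is to compare its mean in the two domains in units of the pooled standard deviation. The sketch below applies this to the Feature 2 columns of Tables 2 and 3; the choice of measure and the name `standardized_mean_shift` are assumptions made for illustration, and other measures (for example distribution distances) could be used instead.

```python
from statistics import mean, stdev

def standardized_mean_shift(source, target):
    """Difference of means divided by the pooled sample standard deviation."""
    pooled = ((stdev(source) ** 2 + stdev(target) ** 2) / 2) ** 0.5
    if pooled == 0:
        return 0.0
    return (mean(target) - mean(source)) / pooled

# Feature 2 values from Table 2 (Domain A) and Table 3 (Domain B).
FEATURE2_A = [2.08, 2.59, 3.11, 3.62, 4.13, 4.65, 5.16, 5.67, 6.19, 6.70]
FEATURE2_B = [13.07, 13.25, 13.44, 13.62, 13.80, 13.99, 14.17, 14.35, 14.54, 14.72]

mean_gap = mean(FEATURE2_B) - mean(FEATURE2_A)
shift = standardized_mean_shift(FEATURE2_A, FEATURE2_B)
print(round(mean_gap, 3), round(shift, 2))
```

A large standardized shift indicates that the target values fall well outside the range seen during training, which is the situation in which adaptation is needed.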

Impacts on Medical Imaging Analysis

In medical imaging, domain shift can be extremely challenging, as models trained on data from one set of equipment or one demographic group might not perform well when applied to data from another, owing to differences in image acquisition protocols, patient populations, or disease prevalence. This can reduce the accuracy of automated diagnosis systems and may affect patient outcomes.

To address domain shift, various domain adaptation strategies have been developed. These include transfer learning, where a model trained on one domain is adapted to another, and domain-invariant feature learning, which aims to learn features that are robust to the change between domains. Additionally, data augmentation and synthetic data generation can be employed to simulate a variety of domain shifts during training, strengthening the model’s generalizability.

Adaptive AI Systems in Medical Imaging

Continuous Learning and Model Updating

In medical imaging, adaptive AI systems must incorporate machine learning techniques such as online learning, where the model is incrementally trained on new data, or transfer learning, where a pre-trained model is fine-tuned with data from a new domain. This enables the model to adapt to new patterns in the data, such as novel imaging biomarkers or evolving disease presentations.
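Online learning can be illustrated with a logistic-regression model that is updated one sample at a time by stochastic gradient descent. The sketch below uses a synthetic stream of two-feature samples; the model, learning rate, data, and function names are all assumptions chosen for brevity and do not correspond to a system described in this article.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def online_update(weights, bias, x, y, lr=0.1):
    """One stochastic-gradient step of logistic regression on a single sample."""
    p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    error = p - y  # gradient of the log-loss with respect to the logit
    new_weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return new_weights, bias - lr * error

def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Synthetic stream: (features, label), label 1 = tumor-like sample.
stream = [([0.9, 0.8], 1), ([0.1, 0.2], 0), ([0.8, 0.7], 1), ([0.2, 0.1], 0)]

weights, bias = [0.0, 0.0], 0.0
for _ in range(200):            # replay the stream to mimic data arriving over time
    for x, y in stream:
        weights, bias = online_update(weights, bias, x, y)

print(predict(weights, bias, [0.9, 0.8]), predict(weights, bias, [0.1, 0.2]))
```

Because each update touches only the current sample, the model can keep adapting as new cases arrive without retraining on the full history, at the cost of needing safeguards against drifting on noisy or mislabeled data.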

Cross-Modality and Cross-Institutional Transformation

Such settings require domain adaptation techniques to mitigate issues arising from differences in imaging modalities (for example CT, MRI, and PET) and in institutional practices. This may include the use of generative adversarial networks (GANs) to perform image-to-image translation, supporting model robustness and transferability across imaging technologies and healthcare settings.

Trustworthiness

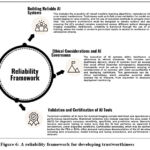

Reliability in AI systems, particularly in medical imaging, requires a multifaceted approach, as depicted in Fig. 6.

|

Figure 6: A reliability framework for developing trustworthiness |

Current Regulatory Landscape

A complex mix of international guidelines, national laws, and industry standards defines the current regulatory landscape for AI in medical imaging. In the U.S., the Food and Drug Administration (FDA) provides oversight through its regulatory framework for Software as a Medical Device (SaMD), which includes AI and machine learning-based software. The FDA’s risk-based approach focuses on the software’s intended use. In Europe, the European Union’s Medical Device Regulation (MDR) classifies and regulates AI as a medical device, with a similar risk-based approach. Both frameworks demand strict clinical evaluation, post-market surveillance, and a quality management system aligned with standards such as ISO 13485. The various factors involved are shown in Fig. 7. AI is not a threat but a tremendous opportunity to assist radiologists by speeding up backend processes, improving workflow, increasing accuracy, and quantifying findings20. Borys et al. sought a common ground for cross-disciplinary understanding and exchange between deep learning developers and healthcare professionals21.

|

Figure 7: Various factors in regulatory and security landscape |

Conclusion

In this study, we used a fuzzy-based framework to address key challenges in AI-driven medical imaging, particularly in improving interpretability, handling domain shifts, and enhancing trustworthiness in MRI-based tumor detection. Our case study demonstrated that applying fuzzy membership grades and weighted average defuzzification techniques can effectively classify tumor pixels, offering clinicians a more transparent decision-making tool compared to conventional AI methods. This approach underscores the potential of fuzzy logic to bridge the gap between AI’s ‘black-box’ nature and the need for explainability in clinical environments.

However, it is essential to recognize that this research was limited to a single case study focusing on MRI scans. The predefined fuzzy membership functions and threshold values may need to be adjusted for different imaging modalities and clinical datasets. Furthermore, while the framework showed promise in managing domain shifts between source and target datasets, its robustness across varied real-world clinical settings remains to be tested.

Future research should focus on extending this framework to larger and more diverse datasets, testing it across other medical imaging techniques such as CT and PET scans, and refining the fuzzy parameters to suit different clinical environments. By integrating fuzzy logic with adaptive learning models and domain-invariant feature extraction, the applicability of this approach could be broadened significantly. These advancements would contribute not only to better AI interpretability but also to the establishment of more trustworthy, reliable AI systems in medical diagnostics. The future of AI in medical imaging holds promising research avenues, including:

In both case studies, a number of additional features may be given, and the threshold value can be adjusted and varied from case to case.

Focusing on developing interpretable AI systems that provide transparency in decision-making processes and ethical considerations in deployment.

Investigating the use of AI in integrating different imaging modalities to provide a comprehensive view of patient pathology.

While fuzzy logic offers a promising pathway for addressing some of the core challenges in AI-based medical imaging, further empirical studies are required to validate its generalizability and clinical utility. The framework presented here serves as a foundational step toward developing interpretable and trustworthy AI solutions that can be seamlessly integrated into clinical workflows, ultimately benefiting patient care and clinical decision-making.

Acknowledgments

The authors would like to express their sincere gratitude to their organization for providing the necessary resources and support to conduct this research. Special thanks are due to Rashmi Singh (Amity Institute of Applied Sciences, Amity University Uttar Pradesh, Noida, India), Aryan Chaudhary (Bio Tech Sphere Research, India), and Samrat Ray (IIMS Pune) for their valuable contributions.

Funding sources

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Conflict of Interest

The author(s) do not have any conflict of interest.

Data Availability Statement

This statement does not apply to this article

Ethics Statement

This research did not involve human participants, animal subjects, or any material that requires ethical approval

Informed Consent Statement

This study did not involve human participants, and therefore, informed consent was not required

Clinical Trial Registration

This research does not involve any clinical trials

Author Contributions

Rashmi Singh: Conceptualization, Methodology, Writing – Original Draft Preparation, Visualization, Validation, Data Interpretation.

Aryan Chaudhary: Conceptualization, Formal Analysis, Writing – Review & Editing, Validation, Resources.

Samrat Ray: Investigation, Ethics, Writing – Review & Editing, Resources.

References

- Oren O, Gersh BJ, Bhatt DL. Artificial intelligence in medical imaging: switching from radiographic pathological data to clinically meaningful endpoints. Lancet Digit Health. 2020; 2(9): E486-E488.

- Prevedello LM, Halabi SS, Shih G, Wu CC, Kohli M, Chokshi F, Erickson B, Cramer J, Andriole K, Flanders AE. Challenges related to artificial intelligence research in medical imaging and the importance of image analysis competitions. Radiol Artif Intell. 2019; 1(1): 1-7.

- Tang X. The role of artificial intelligence in medical imaging research. BJR Open. 2019; 2(1): 1-5.

- Panayides AS, Amini A, Filipovic ND, Sharma A, Tsaftaris SA, Young A, Foran D, Do N, Golemati S, Kurc T, Huang K, Nikita KS, Veasey BP, Zervakis M, Saltz JH, Pattichis CS. AI in medical imaging informatics: current challenges and future directions. IEEE J Biomed Health Inform. 2020; 24(7): 1837-1857.

- Wang S, Cao G, Wang Y, Liao S, Wang Q, Shi J, Li C, Shen D. Review and prospect: artificial intelligence in advanced medical imaging. Front Radiol. 2021; 1: 781868; 1-18.

- Saw SN, Ng KH. Current challenges of implementing artificial intelligence in medical imaging. Phys Med. 2022; 100: 12-17.

- Gupta NS, Kumar P. Perspective of artificial intelligence in healthcare data management: a journey towards precision medicine. Comput Biol Med. 2023; 162: 107051: 1-19.

- Al Kuwaiti A, Nazer K, Al-Reedy A, Al-Shehri S, Al-Muhanna A, Subbarayalu AV, Al Muhanna D, Al-Muhanna FA. A review of the role of artificial intelligence in healthcare. J Pers Med. 2023; 13(6): 951, 1-22.

- Tekkeşin Aİ. Artificial intelligence in healthcare: past, present and future. Anatol J Cardiol. 2019; 22(Suppl 2): 8-9.

- Schaefferkoetter J. Evolution of AI in medical imaging. In: Veit-Haibach P, Herrmann K, eds. Artificial Intelligence/Machine Learning in Nuclear Medicine and Hybrid Imaging. Springer International Publishing, 2022: Chapter 4, 37-56.

- Rajpurkar P, Lungren MP. AI in medicine: the current and future state of AI interpretation of medical images. N Engl J Med. 2023; 388(21): 1981-1990.

- Singh R, Bhardwaj N, Islam SMN. Applications of mathematical techniques to artificial intelligence: Mathematical methods, algorithms, computer programming and applications. In: Advances on Mathematical Modeling and Optimization with Its Applications. Springer, 2024: 152-169.

- Singh R, Bhardwaj N, Islam SMN. The role of mathematics in data science: Methods, algorithms, and computer programs. Bentham Science Publishers, 2023, 1-23.

- Singh R, Khurana K, Khandelwal P. Decision-making in mask disposal techniques using soft set theory. In: Lecture Notes in Electrical Engineering. 2023; 968: 649-661.

- Singh R, Bhardwaj N. Fuzzy soft set theory applications in medical diagnosis: A comprehensive review and the roadmap for future studies. New Math Nat Comput. 2024; 20(1): 1-23.

- Marey A, Arjmand P, Alerab A, Eslami M, Saad A, Sanchez N, Umair M. Explainability, transparency and black box challenges of AI in radiology: impact on patient care in cardiovascular radiology. Egypt J Radiol Nucl Med. 2024; 55(183): 1-14.

- Vimbi V, Shaffi N, Mahmud M. Interpreting artificial intelligence models: a systematic review on the application of LIME and SHAP in Alzheimer’s disease detection. Brain Inform. 2024; 11:10, 1-29.

- Kondylakis H, Kalokyri V, Sfakianakis S, Marias K, Tsiknakis M, Jimenez-Pastor A, Camacho-Ramos E, Blanquer E, Segrelles J, López-Huguet S, Barelle C, Kogut-Czarkowska M, Tsakou G, Siopis N, Sakellariou Z, Bizopoulos P, Drossou V, Lalas A, Votis K, Mallol P, Bonmati L, Alberich L, Seymour K, Boucher S, Ciarrocchi E, Fromont L, Rambla J, Harms A, Gutierrez A, Starmans MPA, Prior F, Gelpi JL, Lekadir K. Data infrastructures for AI in medical imaging: experiences of five EU projects. Eur Radiol Exp. 2024; 7:20, 1-13.

- Ahmed A, Hussein M. Intelligent saline controlling valve based on fuzzy logic. J Eng Appl Sci. 2024; 71:163, 1-21.

- Kohli A. AI in medical imaging: current and future status-artificial intelligence or augmented imaging? Indian J Radiol Imaging. 2021; 31(3): 525-526.

- Borys K, Schmitt YA, Nauta M, Seifert C, Krämer N, Friedrich CM, Nensa F. Explainable AI in medical imaging: an overview for clinical practitioners – beyond saliency-based XAI approaches. Eur J Radiol. 2023; 162: 110786, 1-11.