Swati Shilaskar1*, Shripad Bhatlawande1, Mayur Talewar1, Sidhesh Goud1, Soham Tak1, Sachi Kurian2 and Anjali Solanke3

1Department of E and TC Engineering, Vishwakarma Institute of Technology, Pune, India

2Department of Biomedical Engineering, Rutgers University School of Engineering, New Brunswick, USA

3Department of E and TC Engineering, Marathwada Mitramandal College of Engineering, Pune, India

Corresponding Author E-mail: swati.shilaskar@vit.edu

DOI: https://dx.doi.org/10.13005/bpj/3109

Abstract

Breast cancer remains a leading cause of mortality among women worldwide, necessitating accurate and efficient diagnostic methods. This study uses ultrasound imaging for the early detection of breast tumors, employing two deep learning models: a VGG-16 convolutional neural network (CNN) to classify images and a UNet architecture to segment tumors. The VGG-16 model, known for extracting high-level features, achieved a classification accuracy of 90%, while UNet reached 98% accuracy in segmenting tumor regions. Integrating these models provides a robust framework for breast cancer diagnosis that could enhance clinical workflows and support accurate treatment planning.

Keywords

Breast Tumors; Convolutional Neural Networks; Deep Learning; U-Net; VGG-16

Cite this article: Shilaskar S, Bhatlawande S, Talewar M, Goud S, Tak S, Kurian S, Solanke A. Classification and Segmentation of Breast Tumor Ultrasound Images using VGG-16 and UNet. Biomed Pharmacol J 2025;18(1). Available from: https://bit.ly/4h7g6U3

Introduction

Breast cancer is the most commonly diagnosed cancer in women, accounting for 2.3 million new cases and approximately 670,000 deaths globally in 2022, according to the World Health Organization (WHO). This underscores the need for early diagnosis, which can reduce mortality through appropriate and timely interventions 1. Ultrasound imaging is a widely used, non-invasive diagnostic technique that provides real-time visualization of tissue, making it crucial for identifying and characterizing breast tumors 2,3.

This paper proposes a dual-model system for breast tumor analysis that combines the strengths of two deep learning architectures: a VGG-16 CNN that classifies breast ultrasound images and a UNet model that segments the tumor region. The key contributions are a high-precision classification model, for which VGG-16 achieves 90% accuracy, and a robust segmentation model, for which UNet achieves 98% accuracy. Together, these models address key barriers in tumor detection and classification and aim to improve on the precision of current methods. The study focuses on developing an integrated diagnostic tool that helps identify breast cancer at an early stage and assists clinicians in making informed treatment decisions.

The objective of this research is to develop a deep learning framework robust enough to classify breast ultrasound images as normal, benign, or malignant. A dedicated segmentation model delineates tumor regions within those images as accurately as possible. The models are evaluated using metrics such as accuracy, sensitivity, and specificity to assess their viability for clinical adoption. The main contribution of this research lies in its potential to integrate into existing clinical workflows, improving the efficiency of diagnosis and patient care through earlier and more accurate tumor detection.

The paper is organized section-wise to present the methodological structure of the work 4. Section 1 introduces the importance of early detection, the objectives, and an overview of the proposed solution. Section 2 presents a comprehensive literature review that identifies gaps and critically analyzes the limitations of existing studies. Section 3 details the methodology, including the dataset, the data preprocessing techniques, and the implementation of the algorithms. Section 4 analyzes the results and compares them with prior research. Finally, Section 5 presents the conclusions and proposes future directions for this study.

Literature Review

One study classified breast tumors using a dataset of 780 images from 600 patients in three categories: normal, benign, and malignant. Three classifiers were compared: Support Vector Machines (SVM), k-Nearest Neighbours (KNN), and Decision Trees. Concatenating local binary pattern (LBP) and histogram of oriented gradients (HOG) feature vectors and classifying them with SVM gave the best performance, with 96% accuracy, 97% sensitivity, and 94% specificity 5. Another study used ultrasound scans of 70,950 liver tumors and 13,990 breast tumors, with VGG19 with batch normalization as the CNN. The authors developed separate computer-aided diagnosis (CADx) systems for liver and breast tumors using the large-scale ultrasound image database of JSUM, achieving 91.1% accuracy for liver tumor classification and 85.2% for breast tumor classification 6.

Another study used the Breast Ultrasound Image (BUSI) dataset, which includes approximately 850 ultrasound images, each accompanied by a segmentation mask that manually delineates the breast region and potential tumors. The architecture applied a dual-decoder technique with attention mechanisms, which improve segmentation accuracy by focusing on relevant areas and capturing contextual details 7. The Segment Anything Model (SAM) has also been applied to segment breast tumors in ultrasound images. That study evaluated three variants with different pretrained backbones (ViT_h, ViT_l, and ViT_b) to investigate how prompt interaction affects segmentation performance. Its dataset consists of 780 breast ultrasound images collected from 600 female patients, categorized as normal, benign, or malignant. The model segmented both benign and malignant tumors well, performing slightly better on benign ones 8. In another approach, the ultrasound image is segmented into homogeneous superpixels using the normalized cut (NCuts) method with texture analysis, and an SVM classifier was developed on 50 breast ultrasound images (23 benign and 27 malignant). The images were processed with speckle reducing anisotropic diffusion (SRAD) to suppress speckle while retaining tumor borders. The method achieved a higher FTP than the biased NCuts and GVF algorithms 9. Otsu's thresholding has also been used to convert grayscale images into binary images, followed by morphological operations to detect the tumor region. That study tested the system on 150 MRI images of breast tumors from the RIDER Breast MRI dataset, reporting 100% accuracy for one batch of images and 93.33% for another, for an overall accuracy of 97.33% 10.

An R-CNN model was implemented on the DDSM-BCRP and INbreast datasets, which contain 4750 and 3700 images, respectively. Training was carried out on a TitanX GPU with 12 GB of on-chip memory, and the model achieved an accuracy of 77% on both datasets 11. Another study used 160 ultrasound breast tumor images, covering both benign and malignant cases, for training and testing. The system was built and trained on a machine with an Intel Core i7 processor, 16 GB of RAM, and a graphics card. Using active contour segmentation, discrete wavelet transform (DWT) feature extraction, and statistical classification, the model achieved 99.23% accuracy in distinguishing benign from malignant tumors 12. A breast tumor detection algorithm applied to the MIAS and DDSM datasets achieved a true positive fraction (TPF) of 95.3% on DDSM and 94.6% on MIAS, with precision of 95% and 93.9%, respectively 13. The BreaKHis dataset of 7909 images and the UCBS dataset of 58 images were used to train a CNN model on an HP Z840 workstation with two 64-bit Intel® Xeon® E5-2650 v3 CPUs @ 2.30 GHz, 8 GB of RAM, and an NVIDIA Quadro K5200 graphics card; the model achieved an accuracy of 90% 14.

One study combined the spatially constrained adaptively regularized kernel-based fuzzy c-means (ScARKFCM) algorithm with particle swarm optimization (PSO) clustering. The resulting PSOScARKFCM system detected breast cancer more effectively than the conventional method, with an accuracy of 92.6% and a precision of 91.4% 15. Another study used two datasets of 255 and 375 breast ultrasound image masks, with 85 and 125 images per class (normal, benign, and malignant), respectively. The images were resized to 128 × 128, and ten pre-trained CNN models (InceptionResNetV2, ResNet18, SqueezeNet, ResNet101, ResNet50, Xception, InceptionV3, MobileNetV2, GoogLeNet, and DenseNet201) were trained and validated on the 375-mask dataset using transfer learning (TL). The best global accuracy, 97.25%, was achieved by ResNet50 16. A further study used 378 images from 189 patients (204 benign and 174 malignant), cropped to 560 × 560 pixels. Features were extracted with a VGG-16 backbone producing 32× upsampled predictions, and the model was trained with semantic segmentation to identify malignant characteristics related to the BI-RADS lexicon. The FCN-32s segmentation network showed the highest accuracy, 92.56%, with an AUC of 89.47% 17.

In another study, the Breast Cancer Ultrasound Image (USBC) dataset, with 437 benign, 210 malignant, and 133 normal images (780 in total), was augmented, resized, and cropped. The images were analyzed using TL with InceptionV3, VGG-16, and VGG-19 models combined through ensemble stacking with RBF SVM, polynomial SVM, and differently designed multi-layer perceptron (MLP) classifiers, achieving an AUC of 0.947 and a classification accuracy (CA) of 0.858, particularly with the InceptionV3 + stacking combination 18. A multibranch UNet-based architecture uses autoencoding via multitask learning to perform both tumor segmentation and classification. It was trained on the Breast Ultrasound Images dataset, augmented by flipping images vertically and rotating them by 90, 180, 270, and 360 degrees, with every image scaled to 256 × 256 × 3. The hardware used was an NVIDIA Tesla V100 32 GB GPU, and the average AUROC achieved was 0.76 19. The MRI-US multi-modality network (MUM-Net) classified breast tumors using 3D MR and 2D US images from a dataset of 502 patients with three clinical indicators for paired MRI-US breast tumor classification. Preprocessing included normalizing the US and DCE-MRI images to the range [0, 1], augmentation, and resizing, and training used an NVIDIA Tesla V100 32 GB GPU. A feature fusion module improves the compactness of modality-agnostic features, resulting in an AUC of 0.818 20. HOG-based features classified with the XGBoost algorithm gave a tumor detection accuracy of 92% 21. Malignant skin tumors were identified with 93.6% accuracy by extracting the gray-level co-occurrence matrix (GLCM) and computing Haralick texture features 22.

Overall, this review reveals several research gaps in breast tumor detection and classification: the need to integrate multiple imaging modalities to enhance diagnostic accuracy, to explore advanced deep learning architectures for better detection performance, to improve the interpretability of deep learning models for clinical acceptance, and to carry out rigorous clinical validation to ensure real-world effectiveness and adoption. In addition, several studies use relatively small datasets, which may limit the generalization and robustness of the resulting models; larger and more diverse datasets covering a wide range of tumor characteristics, including variations in size, shape, and texture, are needed to ensure that the algorithms work across different populations and settings. Addressing these gaps holds significant promise for advancing breast tumor detection and classification and, ultimately, for improving patient outcomes. This research uses the deep learning architectures VGG-16 and U-Net for the detection and diagnosis of breast cancer.

Table 1 compares the performance of different models on various datasets. In 2020, Otsu's segmentation achieved 97.33% accuracy on the RIDER Breast MRI dataset. In 2016, R-CNN models tested on the DDSM-BCRP and INbreast datasets achieved 77% accuracy.

Table 1: Comparison of Prior Art

| Reference | Dataset Used | Algorithm and Performance Evaluation |
|---|---|---|
| [10] (2020) | RIDER Breast MRI, 1500 images | Otsu's segmentation, Acc. 97.33% |
| [11] (2016) | DDSM-BCRP, 4750 images; INbreast, 3700 images | R-CNN, Acc. 77% |
| [13] (2022) | MIAS, 322 images; DDSM, 2620 images | Efficient seed region growing segmentation, TPF: MIAS 94.6%, DDSM 95.3% |
| [14] (2022) | BreaKHis, 7909 images | CNN, Acc. 90% |
| [15] (2023) | MIAS, 322 images | PSO, Acc. 92.6%, Precision 91.4% |

In 2022, a CNN achieved 90% accuracy on the BreaKHis dataset, and another system achieved a true positive fraction (TPF) of 95.3% on DDSM and 94.6% on MIAS. In 2023, 92.6% accuracy and 91.4% precision were attained on the MIAS dataset.

Materials and Methods

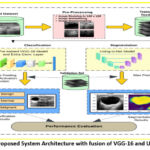

In pursuit of robust and reliable tumor detection, the methodology is structured to process and evaluate breast ultrasound images and classify them as normal, benign, or malignant. The algorithm takes a breast ultrasound image as input, determines through a series of steps whether it is normal, benign, or malignant, and predicts a mask that shows the area of the tumor, as shown in Figure 1.

Figure 1: Block Diagram of Breast Tumor Classification and Segmentation

Dataset Description

This study uses the Breast Ultrasound Image (BUSI) dataset, collected in 2018 from 600 female patients aged 25 to 75 years. It contains 780 PNG images with an average size of 500 × 500 pixels, and both the original images and their ground-truth masks are provided. The images belong to three classes: Normal (Figure 2), Benign (Figure 3), and Malignant (Figure 4). Table 2 summarizes the data split: about 70% of the data (582 images) is used for training, 15% (124 images) for validation, and 15% (124 images) for testing, with each class divided in roughly the same proportions 23.
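A per-class (stratified) 70/15/15 split like the one summarized in Table 2 can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the function name `stratified_split`, the fixed seed, and the rounding rule are assumptions, so the resulting counts can differ from Table 2 by an image or two for some classes.

```python
import random


def stratified_split(files_by_class, fractions=(0.70, 0.15, 0.15), seed=42):
    """Split each class separately so train/val/test keep the class ratios.

    files_by_class maps a class label to a list of image file names.
    Returns a dict of (file_name, label) lists for "train", "val" and "test".
    """
    rng = random.Random(seed)  # fixed seed (assumption) for a reproducible split
    splits = {"train": [], "val": [], "test": []}
    for label, files in files_by_class.items():
        files = list(files)
        rng.shuffle(files)
        n = len(files)
        n_val = round(n * fractions[1])
        n_test = round(n * fractions[2])
        n_train = n - n_val - n_test  # the remainder goes to training
        splits["train"] += [(f, label) for f in files[:n_train]]
        splits["val"] += [(f, label) for f in files[n_train:n_train + n_val]]
        splits["test"] += [(f, label) for f in files[n_train + n_val:]]
    return splits


# Hypothetical file names, using the Normal class count from Table 2.
normal = [f"normal_{i}.png" for i in range(133)]
parts = stratified_split({"normal": normal})
print({k: len(v) for k, v in parts.items()})  # {'train': 93, 'val': 20, 'test': 20}
```

Splitting each class on its own keeps the minority Normal and Malignant classes represented in every subset; scikit-learn's `train_test_split` with its `stratify` argument, applied twice, achieves the same effect.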

Figure 2: Normal Breast Ultrasound Images

Figure 3: Benign Breast Tumor Ultrasound Images

Figure 4: Malignant Breast Tumor Ultrasound Images

Table 2: Data Split-Up

| Database | Class | Training | Validation | Testing | Total |
|---|---|---|---|---|---|
| BUSI Dataset | Normal | 93 | 20 | 20 | 133 |
| | Benign | 341 | 73 | 73 | 487 |
| | Malignant | 148 | 31 | 31 | 210 |
| Total | | 582 | 124 | 124 | 780 |

Pre-processing

The BUSI images of all three classes (normal, benign, and malignant) were resized to 128×128 pixels.
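The resize-and-normalize step, which also opens Algorithms 1 and 2, can be sketched with NumPy alone. Nearest-neighbour interpolation and division by 255 for 8-bit PNG pixels are assumptions, since the interpolation and scaling are not specified; in practice a library routine such as OpenCV's `cv2.resize` would normally be used.

```python
import numpy as np


def resize_nearest(image, size=(128, 128)):
    """Nearest-neighbour resize of an H x W or H x W x C array to size=(rows, cols)."""
    rows = np.arange(size[0]) * image.shape[0] // size[0]  # source row per output row
    cols = np.arange(size[1]) * image.shape[1] // size[1]  # source col per output col
    return image[rows[:, None], cols[None, :]]


def preprocess(image, size=(128, 128)):
    """Resize, then scale 8-bit pixel values into the range [0, 1]."""
    return resize_nearest(image, size).astype(np.float32) / 255.0


# A synthetic 500 x 500 RGB array stands in for a BUSI ultrasound image.
dummy = np.random.default_rng(0).integers(0, 256, size=(500, 500, 3), dtype=np.uint8)
x = preprocess(dummy)
print(x.shape, x.dtype)  # (128, 128, 3) float32
```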

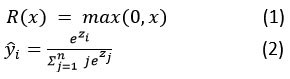

After resizing, the images are passed to the CNN models. For classification, a pretrained VGG-16 model, illustrated in Figure 5, classifies images as normal, benign, or malignant by applying filters of size 3 from 256×256 to 16×16 resolution. An additional convolution layer and a fully connected layer are added after the output of the pretrained VGG-16 model to improve accuracy. The rectified linear unit (ReLU) activation function, given in equation (1), is used along with neuron dropout at a rate of 0.1 to reduce overfitting. Max pooling is then applied, the feature matrix is flattened and passed to a dense layer with the softmax function (2), and the final model is compiled with the Adam optimizer, as given in Algorithm 1.
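For reference, the two functions cited as equations (1) and (2) have the following standard forms, where $z$ is a neuron's pre-activation, $\mathbf{z} = (z_1, \dots, z_K)$ is the vector of output-layer scores, and $K = 3$ for the normal, benign, and malignant classes:

$$
R(z) = \max(0, z) \tag{1}
$$

$$
\sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \dots, K \tag{2}
$$

The softmax outputs are non-negative and sum to 1, so the predicted class is the index $i$ with the largest $\sigma(\mathbf{z})_i$.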

Algorithm 1: Classification using VGG-16

Input: Breast ultrasound images (PNG format)

Split the dataset into training (70%), validation (15%), and testing (15%) sets.

for image in folder:

Normalize and resize the image.

Extract features with VGG-16 using 3×3 filters.

Apply an additional 2D convolution layer to the VGG-16 output.

Apply the ReLU activation R(z) = max(0, z).

Apply average pooling to the convolution output feature map.

Flatten the 2D feature map to a 1D vector and classify it with a dense softmax layer.

end for

Output: Classified as normal, benign, or malignant

Figure 5: Proposed System Architecture with fusion of VGG-16 and UNet models

The segmentation model, given in Algorithm 2, uses UNet to mask the tumor area in the images. The UNet is built from a custom 2D convolution block that applies two convolutions with identical parameters (a kernel size of 3 and the same number of filters), each followed by batch normalization and a ReLU activation. In the encoder, which downsamples the image, the number of filters increases from 16 to 256; the output of each encoder level is passed to the corresponding level of the decoder, which upsamples the image, and neuron dropout at a rate of 0.07 is applied.

Algorithm 2: Segmentation using UNet

Input: Breast ultrasound images (PNG format)

Define the custom 2D convolution block.

for image in folder:

Normalize and resize the image.

Downsample the image using the custom Conv2D block (encoder).

Apply max pooling and neuron dropout at a rate of 0.1 at each encoder level.

Upsample each level with a transposed convolution, combining it with the corresponding encoder output (decoder).

Apply a Conv2D layer with a sigmoid activation to the last decoded layer.

end for

Output: Mask image of tumor
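The encoder-decoder structure of Algorithm 2 can be sketched in Keras as below. This is an illustrative reconstruction, not the authors' code: the 128×128×3 input, four pooling levels (so the filters grow 16, 32, 64, 128 to a 256-filter bottleneck), `padding="same"`, concatenation skip connections, and dropout after each pooling and concatenation are assumptions. The dropout rate is a parameter because the text gives 0.07 while Algorithm 2 gives 0.1.

```python
import keras
from keras import layers


def conv_block(x, filters):
    """Custom block: two 3x3 convolutions, each followed by batch norm and ReLU."""
    for _ in range(2):
        x = layers.Conv2D(filters, kernel_size=3, padding="same")(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation("relu")(x)
    return x


def build_unet(input_shape=(128, 128, 3), base_filters=16, depth=4, dropout=0.1):
    """UNet sketch whose filter count doubles from 16 to a 256-filter bottleneck."""
    inputs = keras.Input(shape=input_shape)
    x = inputs
    skips = []

    # Encoder: conv block, keep the output for the skip connection, then
    # downsample with max pooling and apply dropout.
    for level in range(depth):
        x = conv_block(x, base_filters * 2 ** level)
        skips.append(x)
        x = layers.MaxPooling2D(pool_size=2)(x)
        x = layers.Dropout(dropout)(x)

    # Bottleneck at the lowest resolution (16 * 2**4 = 256 filters).
    x = conv_block(x, base_filters * 2 ** depth)

    # Decoder: a transposed convolution doubles the resolution, then the
    # matching encoder output is concatenated to restore spatial detail.
    for level in reversed(range(depth)):
        filters = base_filters * 2 ** level
        x = layers.Conv2DTranspose(filters, kernel_size=3, strides=2,
                                   padding="same")(x)
        x = layers.concatenate([x, skips[level]])
        x = layers.Dropout(dropout)(x)
        x = conv_block(x, filters)

    # 1x1 convolution with sigmoid: per-pixel tumor probability.
    outputs = layers.Conv2D(1, kernel_size=1, activation="sigmoid")(x)
    model = keras.Model(inputs, outputs, name="unet_sketch")
    model.compile(optimizer=keras.optimizers.Adam(),
                  loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

The input side length must be divisible by `2 ** depth` (16 here) so that each upsampled feature map lines up with its skip connection; `build_unet().summary()` prints the resulting layer stack.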

The masks produced by the proposed segmentation model matched the ground-truth masks in the dataset with an accuracy of 98%.
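The text does not specify how the 98% segmentation accuracy is computed. If it is pixel accuracy (the fraction of pixels labelled correctly), it can be computed as in the NumPy sketch below, shown next to the Dice coefficient, which is commonly reported for segmentation because it is not dominated by the large, easy background. The 0.5 threshold and the function names are assumptions. The example illustrates why the distinction matters: an empty prediction for a small tumor still scores a high pixel accuracy.

```python
import numpy as np


def binarize(prob_mask, threshold=0.5):
    """Turn a sigmoid probability map into a boolean tumor mask."""
    return prob_mask >= threshold


def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return float(np.mean(pred == truth))


def dice(pred, truth, eps=1e-7):
    """Dice = 2 * |P and T| / (|P| + |T|); eps keeps empty-vs-empty masks at 1."""
    intersection = np.logical_and(pred, truth).sum()
    return float((2.0 * intersection + eps) / (pred.sum() + truth.sum() + eps))


truth = np.zeros((128, 128), dtype=bool)
truth[60:70, 60:70] = True               # a 10 x 10 "tumor" (100 pixels)
empty = np.zeros_like(truth)             # a model that predicts no tumor at all
print(round(pixel_accuracy(empty, truth), 3))  # 0.994
print(round(dice(empty, truth), 3))            # 0.0
```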

Results and Discussion

The results highlight the effectiveness of the proposed methodology in identifying and categorizing breast tumors. The VGG-16 convolutional neural network (CNN) achieved a classification accuracy of 90% on the Breast Ultrasound Image (BUSI) dataset, showing that it can differentiate between normal, benign, and malignant breast tissue and suggesting its potential to aid clinicians in diagnosing breast cancer.

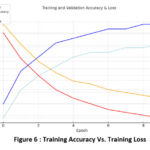

Figure 6: Training Accuracy vs. Training Loss

The model was trained for ten epochs; during training, the training accuracy increased and the training loss decreased, as shown in Figure 6.

|

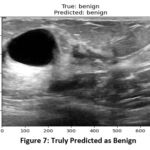

Figure 7: Truly Predicted as Benign

|

|

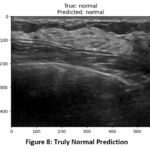

Figure 8: Truly Normal Prediction

|

When images from the validation set were passed to the model, it predicted the correct class for the examples shown. In Figure 7, the actual class of the image is benign and the model correctly predicts benign; similarly, in Figure 8, the model predicts normal for an image of the normal class.

In Figure 9, a malignant image is correctly predicted as malignant. These examples illustrate the model's ability to distinguish between benign and malignant tumors. Table 3 shows the confusion matrix of the classifier on the test set.

Figure 9: Correctly Predicted as Malignant

Table 3: Confusion Matrix for Testing Performance

| Actual / Predicted | Normal | Benign | Malignant | Total |
|---|---|---|---|---|
| Normal | 18 | 1 | 1 | 20 |
| Benign | 2 | 66 | 5 | 73 |
| Malignant | 0 | 3 | 28 | 31 |
| Total | 20 | 70 | 34 | 124 |
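Sensitivity and specificity, which the Introduction lists as evaluation metrics, follow directly from Table 3. The sketch below assumes that rows are actual classes and columns are predicted classes (the row totals 20, 73 and 31 match the test split in Table 2) and computes one-vs-rest metrics for each class; the function name is illustrative.

```python
def per_class_metrics(matrix, labels):
    """One-vs-rest metrics from a confusion matrix.

    matrix[i][j] counts test images of actual class i predicted as class j.
    """
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(labels)))
    results = {"accuracy": correct / total}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                 # actual i, predicted otherwise
        fp = sum(row[i] for row in matrix) - tp  # predicted i, actually other
        tn = total - tp - fn - fp
        results[label] = {
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
            "precision": tp / (tp + fp),
        }
    return results


table3 = [[18, 1, 1],
          [2, 66, 5],
          [0, 3, 28]]
m = per_class_metrics(table3, ["normal", "benign", "malignant"])
print(round(m["accuracy"], 3))                  # 0.903
print(round(m["malignant"]["sensitivity"], 3))  # 0.903
print(round(m["benign"]["specificity"], 3))     # 0.922
```

The overall accuracy, 112/124 ≈ 90.3%, agrees with the 90% classification accuracy reported for VGG-16.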

The UNet segmentation model accurately localizes tumors in the ultrasound images: the predicted masks align closely with the ground-truth masks, indicating that the model captures tumor borders well.

|

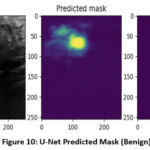

Figure 10: U-Net Predicted Mask (Benign)

|

As shown in Figure 10, the mask predicted for the benign image closely matches its ground-truth mask.

|

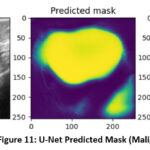

Figure 11: U-Net Predicted Mask (Malignant)

|

Likewise, as shown in Figure 11, the mask predicted by the UNet segmentation model for the malignant image fits the ground-truth mask well. This alignment between predicted and ground-truth masks shows that the UNet model segments both malignant and benign regions effectively. Together, the VGG-16 and UNet models form a fused architecture for tumor detection, classification, and segmentation, with high segmentation performance.

In the literature, LBP and HOG features classified with an SVM yielded 96% accuracy 5. Haralick features discriminate lesion texture 22 but do not segment the tumor. Tumor segmentation with a level set method has also been reported: with an opening radius of 8, the highest sensitivity of 72.7% was obtained 24, while larger radii caused misjudgments that reduced sensitivity to as low as 50%. CRPU-Net segmented colonoscopy images with an accuracy of 96.42%, with generalization improved through k-fold cross-validation 25.

The presented work implements a two-step process: classification followed by detailed segmentation of the images identified as benign or malignant. This dual functionality goes beyond the single-function models cited in the literature, which generally report lower accuracy in comprehensive diagnostic settings. For example, the VGG19-based CADx system 6 reported 85.2% accuracy for breast tumor classification, compared with 90% for the proposed VGG-16 classifier, although on a different dataset.
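The two-step logic, classify first and segment only the images labelled benign or malignant, can be written as a small wrapper. In this sketch, `classify` and `segment` are hypothetical callables standing in for the trained VGG-16 and UNet models, and returning `None` for normal images is an assumption about how the pipeline reports "no tumor".

```python
from typing import Any, Callable, Optional, Tuple

TUMOR_CLASSES = ("benign", "malignant")


def diagnose(image: Any,
             classify: Callable[[Any], str],
             segment: Callable[[Any], Any]) -> Tuple[str, Optional[Any]]:
    """Stage 1: classify the image. Stage 2: segment only if a tumor is present."""
    label = classify(image)
    mask = segment(image) if label in TUMOR_CLASSES else None
    return label, mask


# Stub models for illustration; real code would wrap the models' predictions.
print(diagnose("img_a", lambda im: "normal", lambda im: "mask"))     # ('normal', None)
print(diagnose("img_b", lambda im: "malignant", lambda im: "mask"))  # ('malignant', 'mask')
```

Gating the segmenter on the classifier's output avoids running UNet on normal images, at the cost that a tumor misclassified as normal receives no mask.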

The results rely on the BUSI dataset, which is demographically limited and contains only 780 images; it may therefore be insufficient for training deep learning models that generalize well. In addition, complex tumor shapes and boundaries may pose challenges for the segmentation model.

Conclusion and Future Scope

This research proposes an approach for accurate and efficient breast tumor detection based on deep learning, using a VGG-16 convolutional neural network (CNN) for classification and a UNet model for segmentation. On the Breast Ultrasound Image (BUSI) dataset, the approach shows promise in accurately identifying and segmenting breast tumors in ultrasound images. The UNet segmentation model delineates tumor regions accurately, with predictions closely aligned with the ground-truth masks. The classification accuracy is 90% and the segmentation accuracy is 98%. These results highlight the potential of deep learning segmentation algorithms to improve tumor localization and support treatment planning compared with previous techniques.

Future work will extend the application of deep learning to the detection and segmentation of breast tumors in several ways. It could investigate novel fusion methods, explore transfer learning with other state-of-the-art architectures, and assess the effect of data augmentation techniques. Clinical validation studies with real-time deployment and consultation with medical professionals could help translate this work into practical clinical applications. Other issues to address for better model effectiveness are the class imbalance in the dataset used here and the development of interactive learning methods that allow continuous learning from user feedback.

Acknowledgement

The authors would like to thank the management of Vishwakarma Institute of Technology, Pune, for their support and motivation.

Funding Sources

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Conflict of Interest

The author(s) do not have any conflict of interest.

Data Availability Statement

This statement does not apply to this article.

Ethics Statement

This research did not involve human participants, animal subjects, or any material that requires ethical approval.

Informed Consent Statement

This study did not involve human participants, and therefore, informed consent was not required.

Clinical Trial Registration

This research does not involve any clinical trials.

Author Contributions

- Swati Shilaskar : Project Administration, Analysis

- Shripad Bhatlawande : Conceptualization, Resources

- Mayur Talewar : Data aggregation, Masking, Original Draft

- Sidhesh Goud : Coding, Visualization, Original Draft

- Soham Tak : Segmentation analysis, Review and editing

- Sachi Kurian : Analysis, Writing – Review & Editing

- Anjali Solanke : Methodology, Supervision, Review

References

- Acharjya K, Manikandan M. Accurate breast tumor identification using cutting-edge deep learning. In: 2023 International Conference on Recent Advances in Science and Engineering Technology (ICRASET). IEEE; 2023:1-5. doi:10.1109/ICRASET59632.2023.10420410.

- Daoud MI, Al-Ali A, Ali MZ, Hababeh I, Alazrai R. Detecting the regions-of-interest that enclose the tumors in breast ultrasound images using the RetinaNet model. In: 2023 10th International Conference on Electrical and Electronics Engineering (ICEEE). IEEE; 2023:36-40. doi:10.1109/ICEEE59925.2023.00014.

- Xu M, Huang J, Huang K, Liu F. Incorporating tumor edge information for fine-grained BI-RADS classification of breast ultrasound images. IEEE Access. 2024. doi:10.1109/ACCESS.2024.3374380.

- Selvaraj, J., & Jayanthy, A. K. Design and development of artificial intelligence-based application programming interface for early detection and diagnosis of colorectal cancer from wireless capsule endoscopy images. International Journal of Imaging Systems and Technology, 34(2), e23034, 2024. https://doi.org/10.1002/ima.23034

- Benaouali M, Bentoumi M, Touati M, Ahmed AT, Mimi M. Segmentation and classification of benign and malignant breast tumors via texture characterization from ultrasound images. In: 2022 7th International Conference on Image and Signal Processing and their Applications (ISPA). IEEE; 2022:1-4. doi:10.1109/ISPA54004.2022.9786350.

- Yamakawa M, Shiina T, Tsugawa K, Nishida N, Kudo M. Deep-learning framework based on a large ultrasound image database to realize computer-aided diagnosis for liver and breast tumors. In: 2021 IEEE International Ultrasonics Symposium (IUS). IEEE; 2021:1-4. doi:10.1109/IUS52206.2021.9593518.

- Hekal AA, Elnakib A, Moustafa HED, Amer HM. Breast cancer segmentation from ultrasound images using deep dual-decoder technology with attention network. IEEE Access. 2024. doi:10.1109/ACCESS.2024.3351564.

- Hu M, Li Y, Yang X. BreastSAM: a study of segment anything model for breast tumor detection in ultrasound images. arXiv preprint arXiv:2305.12447. 2023. doi:10.48550/arXiv.2305.12447.

- Daoud MI, Atallah AA, Awwad F, Al-Najar M. Accurate and fully automatic segmentation of breast ultrasound images by combining image boundary and region information. In: 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI). IEEE; 2016:718-721. doi:10.1109/ISBI.2016.7493367.

- Rahman M, Hussain MG, Hasan MR, Sultana B, Akter S. Detection and segmentation of breast tumor from MRI images using image processing techniques. In: 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC). IEEE; 2020:720-724. doi:10.1109/ICCMC48092.2020.ICCMC-000134.

- Akselrod-Ballin, Ayelet, Leonid Karlinsky, Sharon Alpert, Sharbell Hasoul, Rami Ben-Ari, and Ella Barkan. “A region based convolutional network for tumor detection and classification in breast mammography.” In Deep Learning and Data Labeling for Medical Applications: First International Workshop, LABELS 2016, and Second International Workshop, DLMIA 2016, Held in Conjunction with MICCAI 2016, Athens, Greece, October 21, 2016, Proceedings 2, pp. 197-205. Springer International Publishing, 2016. doi:10.1007/978-3-319-46976-8_21.

- Mahmoud, A. A., El-Shafai, W., Taha, T. E., El-Rabaie, E. S. M., Zahran, O., El-Fishawy, A. S., & Abd El-Samie, F. E. A statistical framework for breast tumor classification from ultrasonic images. Multimedia Tools and Applications, 80, 5977-5996, 2021. doi:10.1007/s11042-020-08693-0.

- Shrivastava N, Bharti J. Breast tumor detection in digital mammogram based on efficient seed region growing segmentation. IETE J Res. 2022;68(4):2463-2475. doi:10.1080/03772063.2019.1710583.

- Saxena S, Shukla PK, Ukalkar Y. A shallow convolutional neural network model for breast cancer histopathology image classification. In: Proceedings of International Conference on Recent Trends in Computing: ICRTC 2022. Singapore: Springer Nature Singapore; 2023: 593-602. doi:10.1007/978-981-19-8825-7_51.

- Balaji S, Arunprasath T, Rajasekaran MP, Sindhuja K, Kottaimalai R. A metaheuristic based clustering approach for breast cancer identification for earlier diagnosis. In: 2023 4th International Conference on Smart Electronics and Communication (ICOSEC). IEEE; 2023:1-7. doi:10.1109/ICOSEC58147.2023.10275824.

- GadAllah, Mohammed Tarek, Abd El-Naser A. Mohamed, Alaa A. Hefnawy, Hassan E. Zidan, Ghada M. El-Banby, and Samir Mohamed Badawy. “Convolutional Neural Networks Based Classification of Segmented Breast Ultrasound Images–A Comparative Preliminary Study.” In 2023 Intelligent Methods, Systems, and Applications (IMSA), 585-590. IEEE, 2023. doi:10.1109/IMSA58542.2023.10217585.

- Ahila SS, Geetha M, Ramesh S, Senthilkumar C, Nirmala P. Identification of malignant attributes in breast ultrasound using a fully convolutional deep learning network and semantic segmentation. In: 2022 3rd International Conference on Smart Electronics and Communication (ICOSEC). IEEE; 2022:1226-1232. doi:10.1109/ICOSEC54921.2022.9952130.

- Rao, Kuncham Sreenivasa, Panduranga Vital Terlapu, D. Jayaram, K. Kishore Raju, G. Kiran Kumar, Rambabu Pemula, Venu Gopalachari, and S. Rakesh. “Intelligent ultrasound imaging for enhanced breast cancer diagnosis: Ensemble transfer learning strategies.” IEEE Access, 2024. doi:10.1109/ACCESS.2024.3358448.

- Adityan MKL, Sharma H, Paul A. Segmentation and classification-based diagnosis of tumors from breast ultrasound images using multibranch Unet. In: 2023 IEEE International Conference on Image Processing (ICIP). IEEE; 2023:2505-2509. doi:10.1109/ICIP49359.2023.10222147.

- Qiao, Mengyun, Chencheng Liu, Zeju Li, Jin Zhou, Qin Xiao, Shichong Zhou, Cai Chang, Yajia Gu, Yi Guo, and Yuanyuan Wang. “Breast tumor classification based on MRI-US images by disentangling modality features.” IEEE Journal of Biomedical and Health Informatics 26, no. 7 (2022): 3059-3067. doi:10.1109/JBHI.2022.3140236.

- S. Shilaskar, T. Mahajan, S. Bhatlawande, S. Chaudhari, R. Mahajan and K. Junnare, “Machine Learning based Brain Tumor Detection and Classification using HOG Feature Descriptor,” 2023 International Conference on Sustainable Computing and Smart Systems (ICSCSS), Coimbatore, India, 2023, 67-75, doi: 10.1109/ICSCSS57650.2023.10169700.

- J. Madake, A. Shembade, K. Shetty, S. Bhatlawande and S. Shilaskar, “Vision-Based Skin Lesion Characterization Using GLCM and Haralick Features,” 2022 IEEE Conference on Interdisciplinary Approaches in Technology and Management for Social Innovation (IATMSI), Gwalior, India, 2022, 1-6, doi: 10.1109/IATMSI56455.2022.10119457.

- Selvaraj, Jothiraj, Snekhalatha Umapathy, and Nanda Amarnath Rajesh. “Artificial intelligence based real time colorectal cancer screening study: Polyp segmentation and classification using multi-house database.” Biomedical Signal Processing and Control 99 (2025): 106928. https://doi.org/10.1016/j.bspc.2024.106928

- David, D. Stalin. “Parasagittal meningioma brain tumor classification system based on MRI images and multi phase level set formulation.” Biomedical and Pharmacology Journal 12, no. 2 (2019): 939-946. https://dx.doi.org/10.13005/bpj/1720

- Selvaraj, Jothiraj, and Snekhalatha Umapathy. “CRPU-NET: a deep learning model based semantic segmentation for the detection of colorectal polyp in lower gastrointestinal tract.” Biomedical Physics & Engineering Express 10, no. 1 (2023): 015018. https://doi.org/10.1088/2057-1976/ad160f