Kamaldeep Kaur, Sumit Budhiraja* and Neeraj Sharma

ECE, UIET, Panjab University, Chandigarh, India-160014

Corresponding Author E-mail: sumit@pu.ac.in

DOI : https://dx.doi.org/10.13005/bpj/1844

Abstract

Image fusion combines complementary information from multiple images, acquired through different sensors, into a single image. In this paper, an image fusion technique based on Gray wolf optimization is proposed for the fusion of two kinds of medical images, Magnetic Resonance Image (MRI) and Positron Emission Tomography (PET). First, the Hilbert transform is applied to extract the informative data from the input images. The relevant part of each input image is selected for fusion on the basis of the information present. The selected portions of the input images are then fused using a Gray wolf optimization based method. The simulation results show improved performance of the proposed framework compared with conventional techniques.

Keywords

Gray Wolf Optimization; Hilbert Transform; IHS; Multimodal Image Fusion

Copy the following to cite this article: Kaur K, Budhiraja S, Sharma N. Multimodal Medical Image Fusion based on Gray Wolf Optimization and Hilbert Transform. Biomed Pharmacol J 2019;12(4). Available from: https://bit.ly/363TxuX

Introduction

Advances in sensor technology have made images from many modalities readily available, and multimodal medical image fusion has attracted considerable research in recent years [1-3]. The fusion of images obtained from imaging modalities such as Computed Tomography (CT), MRI, and PET plays a major role in medical diagnosis and other clinical applications [4-6]. Each modality provides a different kind of information. For example, CT, which is based on X-rays, is generally used to visualize dense structures and is not well suited to soft tissues or physiological studies. In contrast, MRI offers better visualization of soft tissues and is mainly used to detect tumors and other tissue abnormalities. PET is a nuclear imaging method that provides information about blood flow in the body, but its resolution is low compared with CT and MRI. Fusing images from different modalities is therefore advantageous for clinical diagnosis and treatment [7-8].

A fused image is generated by integrating the information from multimodality images. Image fusion techniques are usually grouped into pixel-level, feature-level, and decision-level fusion. For medical imaging, pixel-level techniques are more appropriate, as they preserve spatial details in the fused image better than feature-level and decision-level techniques.

The traditional pixel-level methods, such as addition, subtraction, multiplication, and weighted averaging, are simple but less accurate. IHS based techniques are also popular because they generate high-resolution fused images, but they may cause spectral distortion due to inaccurate estimation of spectral information [9-12]. Likewise, principal component analysis based methods fuse images by replacing certain principal components.

Related Work

In the last few years, a lot of research has taken place in the area of medical image fusion. Hajer Ouerghi et al. noted that the fusion of MRI and PET images is the hybrid modality currently used in various oncology applications [13]. The authors proposed an effective MRI–PET image fusion method based on the non-subsampled shearlet transform (NSST) and a simplified pulse-coupled neural network (S-PCNN).

Bhavana et al. proposed an image fusion method that performs wavelet decomposition of PET and MRI images using different activity levels [14]. Improved color preservation was obtained in the gray matter and white matter areas by varying the structural and spectral information. Huang et al. presented a PET and MRI brain image fusion method based on the wavelet transform for low- and high-activity brain image regions [15]. By adjusting the anatomical structural information in the gray matter (GM) regions, the method produced better fusion outcomes. The authors used normal coronal, normal axial, and Alzheimer’s disease brain images as datasets, and reported improved results compared with existing techniques. In the NSST and S-PCNN based MRI–PET fusion method [13], the PET image is first converted to YIQ independent components. The registered MRI source image and the Y component of the PET image are then decomposed into low-frequency (LF) and high-frequency (HF) subbands using NSST. Finally, the inverse NSST and inverse YIQ transforms are applied to obtain the fused image. The simulation results showed improved performance compared with similar methods. Haribabu et al. proposed a method that is computationally simple and can be executed in real-time applications [16]. Daneshvar et al. introduced a novel application of the human vision system in multispectral medical image fusion [17]; the simulation results demonstrated preservation of more spectral features with less spatial distortion. Mehdi et al. offered a novel method based on bi-dimensional empirical mode decomposition (BEMD) [18]. BEMD was used to decompose the MRI image and the intensity component of the PET image obtained with the IHS transform. The meaningful information was then collected from both images and the irrelevant information was discarded. The simulation results showed improvement over existing techniques. Fayad et al. produced 4D MR images and the related attenuation maps from a single static MR image and motion fields derived from simultaneously acquired 4D non-attenuation-corrected (NAC) PET images [19]. The accuracy of the method was assessed by comparing the output images with the real images. Denoising algorithms have also been used by researchers to deal with noise in the images [20].

Traditionally, multiscale methods have been very popular for image fusion, as they are simple and represent image information efficiently. Many methods based on various multiscale transforms have been proposed for the fusion of medical images [21-23]. Gauri et al. presented a review of hybrid approaches for fusion of PET and MRI images [24]. Nobariyan et al. presented an image fusion framework using neural networks [25].

Because PET images also contain non-informative regions, the fused image is affected by the irrelevant part of the PET image after fusion with the MRI image. To minimize these issues in traditional methods, many solutions have been proposed by researchers in recent years.

Proposed Framework

In this paper, an image fusion technique based on Gray wolf optimization and the Hilbert transform is proposed. The portion of each image used for fusion is selected on the basis of intensity, so that only the informative part takes part in the fusion. Gray Wolf Optimization is then employed to fuse the PET and MRI images [26]. The proposed technique can be described in the following steps:

First, the Hilbert transform is applied to the MRI image; the process for the PET image is different. The 2-D Hilbert transform (2DHT) is used for signal processing. In the spatial domain, the 2DHT is formulated as:

![]()

The function for frequency domain is as follows:

![]()

From (1), the 2DHT is evaluated as:

![]()
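For reference, the textbook form of the (total) 2-D Hilbert transform can be written as below. This is the standard definition and is only assumed to correspond to the equations referenced above; the symbols $f$, $f_{HT}$, $H$, $F$ and the variable names $(x,y)$, $(u,v)$, $(\tau,\eta)$ are chosen here for illustration and are not taken from the source.

```latex
% Spatial domain: 2-D convolution with the kernel 1/(pi^2 x y), taken as a principal value
f_{HT}(x,y) = f(x,y) ** \frac{1}{\pi^{2} x y}
            = \frac{1}{\pi^{2}}\,\mathrm{p.v.}\!\iint
              \frac{f(\tau,\eta)}{(x-\tau)(y-\eta)}\,d\tau\,d\eta

% Frequency domain: product of two 1-D Hilbert transfer functions
H(u,v) = \bigl(-j\,\mathrm{sgn}(u)\bigr)\bigl(-j\,\mathrm{sgn}(v)\bigr)
       = -\,\mathrm{sgn}(u)\,\mathrm{sgn}(v)

% Transform evaluated through the frequency domain
F_{HT}(u,v) = -\,\mathrm{sgn}(u)\,\mathrm{sgn}(v)\,F(u,v)
```

Evaluating the transform in the frequency domain avoids the singular spatial kernel, which is why implementations typically go through the FFT.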

The PET images are therefore first converted from RGB to IHS format, after which the Hilbert transform is applied to the Intensity component rather than to Hue or Saturation. The Intensity, Hue and Saturation (IHS) transformation is a widely used method for image sharpening. The visual results indicate that variations in intensity have only a small, controllable effect on the spectral content.
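The step above can be sketched in Python with NumPy. This is a minimal illustration, not the authors' MATLAB implementation: the function names `intensity` and `hilbert2d` are hypothetical, the intensity is assumed to be the simple channel mean used by the linear IHS model, and the transform is assumed to be the standard frequency-domain 2-D Hilbert transform with transfer function $-\mathrm{sgn}(u)\,\mathrm{sgn}(v)$.

```python
import numpy as np

def intensity(rgb):
    """Intensity of an RGB image, assuming the linear IHS model I = (R + G + B) / 3."""
    return np.asarray(rgb, dtype=np.float64).mean(axis=2)

def hilbert2d(image):
    """Standard 2-D Hilbert transform evaluated in the frequency domain.

    Multiplies the 2-D spectrum by -sgn(u) * sgn(v) and transforms back.
    """
    img = np.asarray(image, dtype=np.float64)
    rows, cols = img.shape
    # Sign of the discrete frequency along each axis (0 at the DC component).
    u = np.sign(np.fft.fftfreq(rows))[:, None]
    v = np.sign(np.fft.fftfreq(cols))[None, :]
    transfer = -u * v
    return np.real(np.fft.ifft2(np.fft.fft2(img) * transfer))

# Hypothetical usage: transform the intensity channel of a PET image.
pet_rgb = np.random.default_rng(0).random((64, 64, 3))
pet_intensity_ht = hilbert2d(intensity(pet_rgb))
```

A quick sanity check of the transfer function is that a separable cosine pattern maps to the corresponding sine pattern, which is what the 1-D Hilbert transform does along each axis.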

Applying the Hilbert transform to the MRI and PET images yields two images, HT1 and HT2. The GWO (Gray wolf optimization) based image fusion technique is then applied to fuse them. Optimum spectrum scaling is used, in contrast to conventional scaling. GWO is a swarm intelligence algorithm modeled on the hunting behavior of gray wolves. It consists of the following steps:

Initialization of gray wolf positions

Fitness function

Social hierarchy of gray wolf family

Encircling prey

Hunting

Attacking prey

Search for prey
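The steps listed above can be sketched as a generic Gray Wolf Optimizer. The code below follows the commonly published form of the algorithm (three leaders alpha, beta and delta, and a coefficient `a` decreasing linearly from 2 to 0); it is an assumption that the paper uses this variant. The function name `gwo_minimize`, its parameters, and the sphere test function are hypothetical. The sketch minimizes a fitness function, so a quantity to be maximized, such as mutual information, would be passed in negated.

```python
import numpy as np

def gwo_minimize(fitness, dim, lower, upper, n_wolves=20, n_iter=100, seed=0):
    """Generic Gray Wolf Optimizer (minimization) under the assumptions stated above."""
    rng = np.random.default_rng(seed)
    # Initialization of gray wolf positions inside the search bounds.
    wolves = rng.uniform(lower, upper, size=(n_wolves, dim))
    best_pos, best_fit = None, np.inf

    for t in range(n_iter):
        # Fitness evaluation and social hierarchy: the three best wolves lead.
        scores = np.array([fitness(w) for w in wolves])
        order = np.argsort(scores)
        alpha, beta, delta = (wolves[order[k]].copy() for k in range(3))
        if scores[order[0]] < best_fit:
            best_fit, best_pos = scores[order[0]], alpha.copy()

        # 'a' shrinks from 2 towards 0: large |A| favors searching for prey,
        # small |A| favors attacking (converging on) the prey.
        a = 2.0 * (1.0 - t / n_iter)
        new_pos = np.zeros_like(wolves)
        for leader in (alpha, beta, delta):
            r1 = rng.random(wolves.shape)
            r2 = rng.random(wolves.shape)
            A = 2.0 * a * r1 - a
            C = 2.0 * r2
            D = np.abs(C * leader - wolves)   # encircling distance to the leader
            new_pos += leader - A * D         # hunting step guided by this leader
        # Each wolf moves to the average of the three leader-guided positions.
        wolves = np.clip(new_pos / 3.0, lower, upper)

    return best_pos, best_fit

# Hypothetical usage on a simple test function (sphere), not on image data.
pos, fit = gwo_minimize(lambda x: float(np.sum(x ** 2)), dim=2, lower=-5.0, upper=5.0)
```

In the fusion setting, each wolf position would encode the parameter being tuned (for example a threshold within the stated limits), and the fitness would score the resulting fused image.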

Mutual Information (MI), a quantitative measure of multimodal fusion quality, is used as the fitness function. MI measures the amount of source information preserved in the fused image, so it is maximized. It is calculated as:

$$MI = \sum_{x}\sum_{y} P(x,y)\,\log\frac{P(x,y)}{P(x)\,P(y)} \qquad (4)$$

In Eq. (4), P(x,y) is the joint probability distribution function, whereas P(x) and P(y) represent the marginal probability functions of the two modalities, respectively.
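A minimal sketch of estimating mutual information between two images from their joint gray-level histogram is given below. The function name `mutual_information`, the bin count of 32, and the base-2 logarithm are assumptions made for illustration; the source does not state which histogram resolution or logarithm base is used.

```python
import numpy as np

def mutual_information(img_a, img_b, bins=32):
    """Histogram-based estimate of the mutual information between two images (in bits)."""
    # Joint histogram of corresponding pixel values, normalized to P(x, y).
    joint, _, _ = np.histogram2d(np.ravel(img_a), np.ravel(img_b), bins=bins)
    p_xy = joint / joint.sum()
    # Marginal distributions P(x) and P(y).
    p_x = p_xy.sum(axis=1, keepdims=True)
    p_y = p_xy.sum(axis=0, keepdims=True)
    # Sum only over non-empty cells to avoid log(0).
    nz = p_xy > 0
    return float(np.sum(p_xy[nz] * np.log2(p_xy[nz] / (p_x @ p_y)[nz])))

# Hypothetical usage: an image shares far more information with itself
# than with an unrelated random image.
rng = np.random.default_rng(0)
a = rng.random((64, 64))
b = rng.random((64, 64))
mi_self = mutual_information(a, a)
mi_other = mutual_information(a, b)
```

When used as a fitness function inside a minimizer, the value would be negated so that higher mutual information corresponds to a lower cost.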

The next step is to evaluate the fitness, which gives the best threshold for the image fusion process. The two threshold values obtained from the MRI and PET images are added to obtain the final threshold value.

If these values are appropriate for fusion, the images are fused using the wavelet transform. If not, the next iteration is carried out by repeating the fourth and fifth steps.

After fusion, the inverse Hilbert transform is applied to the fused image to obtain the improved I (Intensity) component, which is combined with Hue and Saturation.

The improved I (Intensity) combined with Hue and Saturation gives the final fused image. The performance is then evaluated using several metrics: Discrepancy, Average Gradient, and Overall Performance.
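Recombining an improved intensity with the original hue and saturation can be sketched as follows. The sketch assumes the linear IHS model, in which replacing the intensity and inverting the transform is equivalent to adding the intensity difference to every RGB channel (the "fast IHS" form); whether the paper uses exactly this model is not stated. The function name `ihs_substitute` is hypothetical.

```python
import numpy as np

def ihs_substitute(rgb, new_intensity):
    """Replace the intensity of an RGB image while keeping its hue and saturation.

    Assumes the linear IHS model with I = (R + G + B) / 3, for which the
    inverse transform reduces to adding (I_new - I) to each channel.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    old_intensity = rgb.mean(axis=2)
    delta = np.asarray(new_intensity, dtype=np.float64) - old_intensity
    # Broadcasting adds the same offset to R, G and B at every pixel.
    return rgb + delta[..., None]

# Hypothetical usage with random data standing in for the PET image
# and for the fused intensity.
rng = np.random.default_rng(1)
pet_rgb = rng.random((8, 8, 3))
fused_intensity = rng.random((8, 8))
fused_rgb = ihs_substitute(pet_rgb, fused_intensity)
```

The result is not clipped here; values may need to be clipped to the valid display range before the image is saved or shown.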

Particle Swarm Optimization (PSO) and Differential Evolution (DE) based optimization techniques are used for comparison with the proposed optimization technique [27]. Such techniques are mostly inspired by simple concepts related to physical phenomena, animal behaviour, or evolution. PSO is a soft computing method that optimizes a problem by iteratively trying to improve a candidate solution with regard to a given measure of quality.

Figure 1: Framework of proposed work

A basic variant of the PSO algorithm works with a population (called a swarm) of candidate solutions (called particles). Differential evolution is related to genetic algorithms and pattern search. Unlike particle swarm optimization, it does not use a global best solution in its search equation; instead, it relies on mutation and crossover.
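The difference between the two baseline optimizers can be illustrated with one update step of each. This is a sketch of the textbook forms (inertia-weight PSO and the DE/rand/1/bin scheme), assumed rather than confirmed to be the variants used in the comparison; the function names and the parameter values `w`, `c1`, `c2`, `F` and `CR` are illustrative choices.

```python
import numpy as np

def pso_step(pos, vel, pbest, gbest, rng, w=0.7, c1=1.5, c2=1.5):
    """One PSO update: particles are pulled towards personal and global best positions."""
    r1 = rng.random(pos.shape)
    r2 = rng.random(pos.shape)
    vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
    return pos + vel, vel

def de_trial(pop, i, rng, F=0.5, CR=0.9):
    """One DE/rand/1/bin trial vector for member i: mutation, then binomial crossover."""
    candidates = [k for k in range(len(pop)) if k != i]
    a, b, c = pop[rng.choice(candidates, size=3, replace=False)]
    mutant = a + F * (b - c)                 # mutation uses three other members, no global best
    cross = rng.random(pop.shape[1]) < CR    # binomial crossover mask
    cross[rng.integers(pop.shape[1])] = True # keep at least one mutant component
    return np.where(cross, mutant, pop[i])

# Hypothetical usage on small random populations.
rng = np.random.default_rng(0)
positions = rng.random((5, 2))
velocities = np.zeros_like(positions)
positions, velocities = pso_step(positions, velocities, positions.copy(), positions[0], rng)
trial = de_trial(rng.random((5, 2)), 0, rng)
```

In a full DE loop, the trial vector replaces member `i` only if its fitness is at least as good, which is the selection step omitted here.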

Results and Analysis

The simulation results are obtained by applying the Hilbert transform to the MRI and PET images and then applying the GWO based image fusion technique. The source MRI and PET images are of size 500 × 500 and 499 × 496, respectively. The source images are taken from http://www.med.harvard.edu/aanlib/cases/caseNA/pb9.htm and http://www.med.harvard.edu/aanlib/. The PET image is converted into an IHS image, and the MRI image is converted into a grayscale image of standard size, i.e. 256×256. Finally, the fused image is obtained by combining the grayscale MRI image and the IHS PET image using the GWO based image fusion technique. The proposed method is implemented in MATLAB R2015a.

For performance evaluation of the proposed work, Discrepancy (D), Average Gradient (AG) and Overall Performance (OP) are used [28, 29]. The population size and the number of iterations are varied to observe their effects on the performance of the proposed algorithm. The lower limit of the threshold is 0.5, and the upper limit is 1.

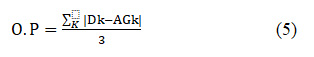

Overall Performance (OP) is calculated as the difference between the first two quantitative evaluation metrics and is taken as the final result. A smaller OP value indicates higher overall fusion quality.

where K=R(Red), G(Green), B(Blue).

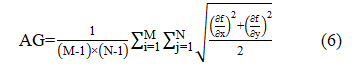

Average Gradient (AG) indicates how well the spatial quality of the input images is preserved in the fused image. A larger average gradient means higher spatial resolution. It is calculated as:

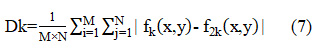

Discrepancy (Dk) indicates how well the spectral features of the input images are preserved in the fused image. A lower discrepancy means higher spectral resolution. It is calculated as:

where are the pixel values of fused image at position (x,y) and M×N is the size of both the input and fused images as 256×256.
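The three metrics can be sketched as below. The exact formulas are not recoverable from the source, so this uses forms commonly found in the IHS fusion literature and treats them as assumptions: discrepancy as the mean absolute difference between a fused channel and the corresponding source channel, average gradient as the mean of $\sqrt{(g_x^2 + g_y^2)/2}$ over forward differences, and overall performance as the absolute difference of the two. All function names are hypothetical.

```python
import numpy as np

def discrepancy(fused_k, source_k):
    """Mean absolute difference between one fused channel and its source channel (assumed form)."""
    fused_k = np.asarray(fused_k, dtype=np.float64)
    source_k = np.asarray(source_k, dtype=np.float64)
    return float(np.mean(np.abs(fused_k - source_k)))

def average_gradient(channel):
    """Mean local gradient magnitude of one channel (assumed form, forward differences)."""
    c = np.asarray(channel, dtype=np.float64)
    gx = c[:-1, 1:] - c[:-1, :-1]   # horizontal difference
    gy = c[1:, :-1] - c[:-1, :-1]   # vertical difference
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))

def overall_performance(d, ag):
    """Absolute difference between discrepancy and average gradient (assumed form)."""
    return abs(d - ag)

# Hypothetical usage on a horizontal ramp image: unit horizontal gradient, no vertical gradient.
ramp = np.tile(np.arange(16, dtype=np.float64), (16, 1))
ag_ramp = average_gradient(ramp)
d_same = discrepancy(ramp, ramp)
```

For a colour result, each metric would be computed per channel K = R, G, B and then combined, for example by averaging; how the source combines the channels is not stated.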

Figure 2: (a) MRI Brain Image (b) PET Brain Image (c) IHS-PET Image (d) IHS New Image (e) HT-IHS Fused Image (f) HT-IHS using GWO Fused Image

The input source images, along with the final fused image obtained by the GWO based fusion method, are shown in Fig 2. The RGB to IHS conversion of the PET image and the IHS image with the new intensity value are shown in Fig 2(c) and Fig 2(d). Fig 2(e) shows the fused image of the traditional 2-D HT and IHS method, and Fig 2(f) shows the fused image obtained with GWO.

The proposed algorithm is implemented with three different optimization techniques: Particle Swarm Optimization, Differential Evolution, and Gray Wolf Optimization. The results are analyzed by changing the number of iterations and the population size. In an optimization technique, the population is a set of randomly generated values, called candidate solutions, on which the output depends. Over the course of the iterations, each candidate solution updates its values to obtain a better result. The performance is evaluated using the discrepancy, average gradient, and overall performance parameters with respect to the variations in iterations and population size.

Table 1: Discrepancy for optimization algorithms in proposed model wrt iterations

| Iterations | PSO | DE | GWO |
|---|---|---|---|
| 10 | 9.32 | 9.16 | 9.31 |
| 20 | 9.50 | 9.23 | 9.31 |
| 30 | 9.52 | 9.32 | 9.32 |
| 40 | 10.1 | 9.54 | 9.39 |
| 50 | 10.1 | 9.73 | 10.1 |

Table 1 shows the discrepancy of the fused image for PSO, DE and GWO in the proposed algorithm. Among the three optimization techniques, DE achieves the highest spectral resolution, with the lowest discrepancy (9.16 at 10 iterations). Discrepancy varies only slightly with the number of iterations, and fewer iterations give lower discrepancy.

Table 2: Average Gradient for optimization algorithms in proposed model wrt iterations

| Iterations | PSO | DE | GWO |
|---|---|---|---|
| 10 | 1.12 | 1.12 | 5.92 |
| 20 | 1.12 | 1.12 | 5.92 |
| 30 | 1.12 | 1.12 | 5.92 |
| 40 | 1.12 | 1.12 | 5.92 |
| 50 | 1.12 | 1.12 | 5.92 |

Table 2 shows the average gradient for PSO, DE and GWO with respect to the number of iterations. The average gradient measures the spatial quality, or clarity, of the final fused image. The table shows that the average gradient of all techniques remains constant as the number of iterations varies, and that GWO achieves a much larger average gradient than DE and PSO.

Table 3: Overall Performance for optimization algorithms in proposed model wrt iterations

| Iterations | PSO | DE | GWO |
|---|---|---|---|
| 10 | 6.65 | 6.95 | 2.09 |
| 20 | 6.66 | 7.01 | 2.16 |
| 30 | 6.66 | 7.06 | 2.24 |
| 40 | 6.66 | 7.08 | 2.26 |
| 50 | 6.68 | 7.09 | 2.28 |

The overall performance of PSO, DE and GWO for different numbers of iterations is shown in Table 3. The overall performance is evaluated as the difference between the discrepancy and the average gradient. GWO gives the lowest overall performance values, which corresponds to better overall fusion quality than the other optimization techniques.

Table 4: Discrepancy for optimization algorithms in proposed model wrt population size

| Population Size | PSO | DE | GWO |
|---|---|---|---|
| 10 | 10.1 | 9.29 | 9.26 |
| 20 | 10.1 | 9.30 | 9.31 |
| 30 | 10.1 | 9.30 | 10.1 |
| 40 | 10.1 | 9.33 | 10.1 |
| 50 | 10.1 | 10.04 | 10.1 |

Table 4 shows the discrepancy obtained using GWO, DE and PSO with respect to population size. GWO has the highest spectral resolution, with the lowest discrepancy (9.26), at a population size of 10.

Table 5: Average Gradient for optimization algorithms in proposed model wrt population size

| Population Size | PSO | DE | GWO |
|---|---|---|---|
| 10 | 1.12 | 1.12 | 5.92 |
| 20 | 1.12 | 1.12 | 5.92 |
| 30 | 1.12 | 1.12 | 5.92 |
| 40 | 1.12 | 1.12 | 5.92 |
| 50 | 1.12 | 1.12 | 5.92 |

Table 5 shows the average gradient for GWO, PSO and DE with respect to the population size. The average gradient measures the spatial quality, or clarity, of the final fused image. The table shows that the average gradient of GWO is considerably higher than that of DE and PSO. The average gradient also remains constant as the population size varies.

Table 6: Overall Performance for optimization algorithms in proposed model wrt population size

| Population Size | PSO | DE | GWO |
|---|---|---|---|
| 10 | 6.66 | 6.91 | 1.86 |
| 20 | 6.66 | 6.93 | 1.86 |
| 30 | 6.79 | 7.04 | 2.15 |
| 40 | 6.89 | 7.06 | 2.30 |
| 50 | 7.07 | 7.09 | 2.31 |

Table 6 shows the overall performance of GWO, PSO and DE for different population sizes. GWO provides the most favourable (i.e. lowest) values of the overall performance parameter across the population sizes compared with the other optimization methods.

The results show that GWO maintains better performance under variations in the number of iterations and the population size, and performs better than DE and PSO. For some values of the input parameters, DE and PSO give better values of the output parameters, but overall GWO gives considerably better results than DE and PSO.

Table 7: Performance of Proposed Method

| Techniques | Discrepancy Value | Average Gradient | Overall Performance |
|---|---|---|---|
| IHS | 14.8 | 5.17 | 9.61 |
| DHT and IHS | 12.8 | 5.23 | 7.57 |
| Gradient pyramid | 15.9 | 4.66 | 11.2 |
| FSD Pyramid | 16.1 | 4.73 | 11.4 |
| 2DHT | 20.3 | 4.89 | 15.2 |
| Haar Wavelet | 13.3 | 5.19 | 8.31 |
| Proposed Work | 10.1 | 5.92 | 5.86 |

After GWO is selected as the optimization technique, the proposed algorithm is evaluated with the three performance parameters. The results obtained using the proposed technique are compared with the IHS method, the 2-D Hilbert transform, the combination of IHS and 2-D Hilbert transform, the gradient pyramid technique, the FSD pyramid technique, and the Haar wavelet method, as shown in Table 7.

The results show that the proposed method obtains the smallest value of overall performance, which means that the proposed GWO based method achieves higher overall fusion quality. Similarly, the lower discrepancy and the larger average gradient of the proposed method indicate higher spectral and spatial resolution of the fused image. It can therefore be concluded that the proposed method offers better discrepancy, average gradient and overall performance than traditional methods. In future, the performance of GWO can be further improved by generating the initial population with a chaotic map.

Conclusion

In this paper, an image fusion technique based on Gray wolf optimization and the Hilbert transform has been presented. In the proposed method, the portion of each image containing significant information is selected for fusion. A GWO based image fusion technique is then applied to fuse the MRI and PET images. In this way, only the informative parts of both images are fused. The proposed method has been analyzed in detail by varying the number of iterations and the population size. The subjective and objective evaluations show that the proposed method offers better performance than traditional techniques.

Acknowledgements

The authors would like to thank the faculty members of ECE, UIET for their help and support regarding this research work.

Conflict of Interest

There is no conflict of interest.

Funding Source

No funding was received for carrying out this research work.

References

- P. James and B. V. Dasarathy, “Medical image fusion: a survey of the state of the art,” Information Fusion, vol. 19, no. 1, pp. 4–19, 2014.

- Shutao Li, Xudong Kang, Leyuan Fang, Jianwen Hu, Haitao Yin, “Pixel-level image fusion: A survey of the state of the art”, Information Fusion, Vol 33, 100–112, 2017

- Du, et al., “An overview of multi-modal medical image fusion”, Neurocomputing Vol 15, 3–20, 2016

- Maruturi Haribabu, Ch. Hima Bindu, K. Satya Prasad, “Multimodal Medical Image Fusion of MRI-PET Using Wavelet Transform”, ICAMNCIA, Pp 127-130, 2012.

- Mehdi Sefidgar Dilmaghani, Sabalan Daneshvar, Mehdy Dousty, “A new MRI and PET Image Fusion algorithm based on BEMD and IHS methods”, ICEE, Pp 118-121, 2017.

- W. Townsend, T. Beyer, and T. M. Blodgett, “PET/CT scanners: A hardware approach to image fusion”, Seminars Nucl. Med., vol. 33, No. 3, pp. 193-204, 2003

- S. Judenhofer et al., “Simultaneous PET-MRI: A new approach for functional and morphological imaging,” Nature Med., vol. 14, no. 4, pp. 459-465, 2008

- Han, T. S. Hatsukami, and C. Yuan, “A multi-scale method for automatic correction of intensity non-uniformity in MR images,” J. Magn. Reson. Imag., vol. 13, no. 3, pp. 428-436, 2001

- Chen, R. Zhang, H. Su, J. Tian, and J. Xia, “SAR and multispectral image fusion using generalized IHS transform based on à Trous wavelet and EMD decompositions”, IEEE Sensors J., vol. 10, no. 3, pp. 737-745, Mar. 2010

- Choi, “A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter,” IEEE Trans. Geosci. Remote Sens., vol. 44, no. 6, pp. 1672-1682, Jun. 2006.

- -M. Tu, S. C. Su, H. C. Shyu, and P. S. Huang, “A new look at IHS like image fusion methods,” Inf. Fusion, vol. 2, no. 3, pp. 177-186, 2001.

- Yang, W. Wan, S. Huang, F. Yuan, S. Yang, and Y. Que, “Remote sensing image fusion based on adaptive IHS and multiscale guided filter”, IEEE Access, vol. 4, pp. 4573-4582, 2016

- Hajer Ouerghi, Olfa Mourali, Ezzeddine Zagrouba, “Non-subsampled shearlet transform based MRI and PET brain Image Fusion using simplified pulse coupled neural network and weight local features in YIQ colour space”, IETIP, Volume 12, Issue 10, Pp 1873-1880, 2018.

- Bhavana, H. K. Krishnappa, “Fusion of MRI and PET images using DWT and adaptive histogram equalization”, ICCSP, Pp 0795-0798, 2016. G. K. Matsopoulos, S. Marshall, and J. N. H. Brunt, “Multiresolution morphological fusion of MR and CT images of the human brain,” IEE Proc.-Vis., Image Signal Process., vol. 141, no. 3, pp. 137-142, Jun. 1994

- Po-Whei Huang, Cheng-I Chen, Ping Chen, Phen-Lan Lin, Li-Pin Hsu, “PET and MRI brain Image Fusion using wavelet transform with structural information adjustment and spectral information patching”, ISBB, Pp 1-4, 2014.

- Maruturi Haribabu, Ch. Hima Bindu, K. Satya Prasad, “Multimodal Medical Image Fusion of MRI-PET Using Wavelet Transform”, ICAMNCIA, Pp 127-130, 2012.

- Sabalan Daneshvar, Hassan Ghassemian, “Fusion of MRI and PET images using retina based multi-resolution transforms”, ISSPIA, Pp 1-4, 2007.

- Mehdi Sefidgar Dilmaghani, Sabalan Daneshvar, Mehdy Dousty, “A new MRI and PET Image Fusion algorithm based on BEMD and IHS methods”, ICEE, Pp 118-121, 2017.

- Hadi Fayad, Holger Schmidt, Christian Wuerslin, Dimitris Visvikis, “4D attenuation map generation in PET/MRI imaging using 4D PET derived motion fields”, NSSMIC, Pp 1-4, 2013.

- Goyal, S. Agrawal, B. S. Sohi, and A. Dogra, “Noise Reduction in MR brain image via various transform domain schemes,” Res. J. Pharmacy Technol., vol. 9, no. 7, pp. 919-924, 2016

- Mohammed Basil Abdul kareem, “Design and Development of Multimodal Medical Image Fusion using Discrete Wavelet Transform”, ICICCT, Pp 1629-1633, 2018.

- Ch Krishna Chaitanya, G Sangamitra Reddy, V. Bhavana, G Sai Chaitanya Varma, “PET and MRI medical image fusion using STDCT and STSVD”, ICCCI, Pp 1-4, 2017.

- Fahim Shabanzade, Hassan Ghassemian, “Combination of wavelet and contourlet transforms for PET and MRI image fusion”, AISP, Pp 178-183, 2017.

- Gauri D. Patne, Padharinath A. Ghonge, Kushal R. Tuckley, “Review of CT and PET image fusion using hybrid algorithm”, ICICC, Pp 1-5, 2017.

- Behzad Kalafje Nobariyan, Sabalan Daneshvar, Andia Foroughi, “A new MRI and PET image fusion algorithm based on pulse coupled neural network”, ICEE, Pp 1950-1955, 2014.

- Ebenezer Daniela, J. Anitha, K. K Kamaleshwaran, Indu Rani, “Optimum spectrum mask based medical image fusion using Gray Wolf Optimization”, Biomedical Signal Processing and Control, Vol 34 pp 36–43, 2017

- Mozhdeh Haddadpour, Sabalan Daneshavar, Hadi Seyedarabi, “PET and MRI image fusion based on combination of 2-D Hilbert transform and IHS method”, Biomedical Journal, Vol 40, No. 4, 219-225, 2017

- S. Bedi, “Image Fusion Techniques and Quality Assessment Parameters for Clinical Diagnosis: A Review” International Journal of Advanced Research in Computer and Communication Engineering Vol. 2, Issue 2, pp 1153-1157, 2013

- S. Xydeas and V. S. Petrovic, “Objective pixel-level image fusion performance measure”, Proc. SPIE, vol. 4051, pp. 89-98, Apr. 2000